So the question is no longer, “should we use AI in research?” The reality is that many of us already are.

The more important question is, “how should we work with AI as a collaborator?”

Seeing AI as a partner gives us a more grounded way to evaluate its role. AI can streamline manual work and scale our capacity, but it can miss nuance, overlook emotional cues, and sometimes nudge us toward surface-level interpretations when the problem calls for depth.

This article explores those trade-offs. It looks at where leveraging AI's strengths makes sense, where its limits become apparent, and where human judgment remains essential. Ultimately, it offers a way for teams to decide which partner should lead at different points in the research workflow.

The Important Trade-offs

The value of AI often depends on how well we understand what it does exceptionally well and where its limitations require human oversight.

AI tends to excel at tasks that are structured, repetitive, or involve processing large amounts of information quickly. It brings speed, scale, and consistency that would be time consuming and labor intensive for humans to match. These strengths make AI especially effective for reducing manual effort across many parts of the research process.

At the same time, AI has clear blind spots. It struggles with situations that depend on human interaction, judgment, and emotional understanding. For example, when analyzing a video recording, AI mainly relies on transcripts to generate summaries or surface high-level patterns, but it misses subtle cues from the research session, such as hesitation, shifts in tone, or micro-expressions.

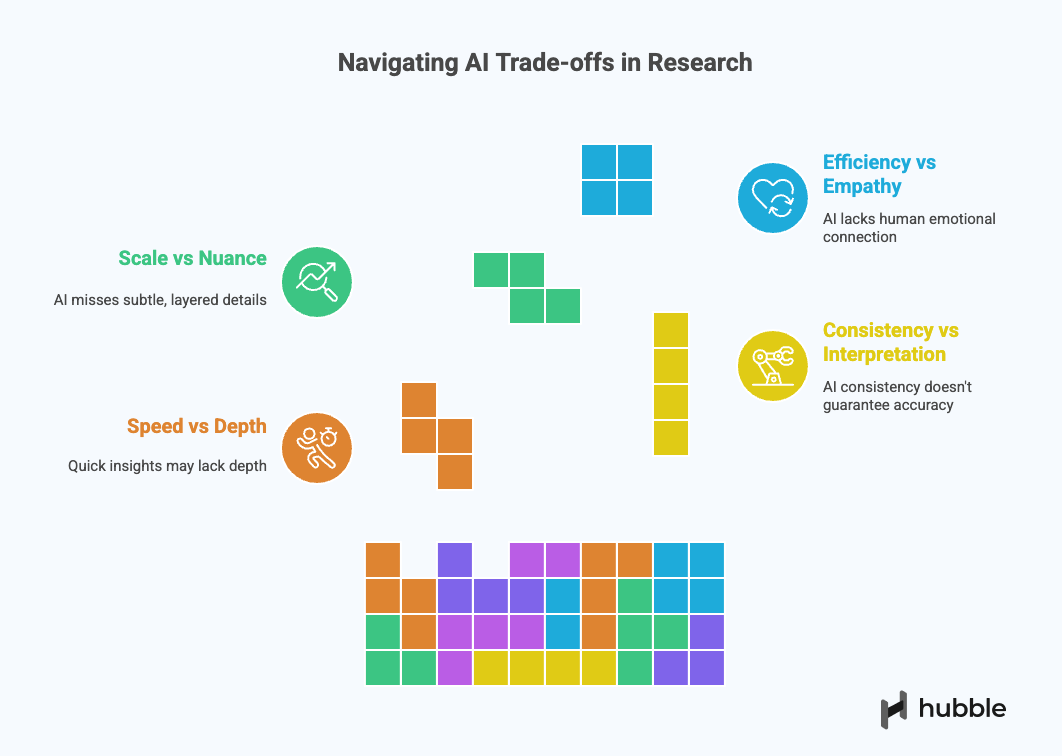

These strengths and limitations create tensions that researchers must navigate when working with AI. They tend to show up in a set of recurring trade-offs that shape how AI is used in day-to-day research work:

1. Speed vs Depth

AI accelerates research work that would normally take hours or days, such as data preprocessing, organizing large datasets, drafting summaries, or scanning transcripts. That speed is valuable, but it can come at the expense of depth.

Meaningful insights often require slowing down, revisiting raw data, and reflecting on context, contradictions, and edge cases. When speed becomes the priority, there is a risk of settling for what is quick to surface rather than what is important to understand.

2. Consistency vs Human Interpretation

AI is steady and excels at simple, repetitive procedures. It follows the structure and instructions it is given, producing consistent outputs across tasks. This reliability is especially useful at scale.

However, consistency does not guarantee accuracy. Automated systems can introduce bias, generate misleading summaries, or confidently surface initial insights that do not accurately capture user needs or pain points. Because AI operates within the constraints of its training data and prompts, it may overlook edge cases, reinforce existing assumptions, or hallucinate patterns that appear coherent but lack grounding.

Human researchers bring judgment and intentional oversight. They validate AI-generated outputs, recognize when results feel off, and contextualize findings within the realities of user behavior and business goals. Rather than accepting AI output at face value, humans interpret, challenge, and refine it.

3. Scale vs Nuance

AI can scan through large volumes of data quickly. It can tag transcripts, cluster responses, and surface recurring patterns across many sessions in a short amount of time. This ability to operate at scale is one of AI’s biggest strengths.

Nuance, however, often lives beyond what is immediately visible in the user research data. Subtle shifts in tone, contradictions between what participants say and do, and layered motivations require interpretation and context. Human researchers are better equipped to recognize when those details matter and how they should influence the final insight.

4. Efficiency vs Empathy

AI can significantly streamline research by handling repetitive and labor-intensive tasks such as transcription, organization, and first-pass synthesis. However, it cannot build rapport, sense discomfort, or adapt to emotional signals in real time.

These human moments shape how participants engage, how deeply they reflect, and how honest or vulnerable they are willing to be. Understanding user emotions, recognizing when to pause or probe further, and knowing how to ask the right follow-up questions all require empathy, or the "human touch". In studies where trust and emotional awareness matter, efficiency alone is not enough.

Where AI Excels in the Research Process

Once the trade-offs are clear, it becomes easier to see where AI genuinely adds value. In many parts of the UX research workflow, AI functions best as a supporting collaborator. It takes on repetitive or structured work, such as planning initial drafts and early study setup, as well as time-intensive tasks like data preprocessing, transcription, and first-pass summaries. This frees researchers to focus on analyzing qualitative data, synthesizing actionable insights, and strategic decision making.

Study Planning and Early Study Setup

AI is particularly effective at helping UX researchers get started. In practice, research is rarely created from scratch. Studies are iterative, often building on prior rounds, existing business context, and familiar customer profiles. Because of this, many foundational components, such as drafting discussion guides or suggesting question phrasing, can be handled effectively with AI.

At this early stage, speed can be valuable. Shortening research cycle time helps teams move faster and allows researchers to focus on higher-value work, such as aligning with stakeholders, refining objectives, and ensuring the study is set up to answer the right questions.

Drafting and refining research materials

When creating surveys, interview guides, or task instructions, generative AI can quickly create alternative phrasings, improve clarity, and adapt content for different audiences. Here, AI’s role is not to decide what to ask, but to help researchers articulate what they already know more clearly and efficiently. It will still be up to the researcher to choose the appropriate research methods, prioritize key research goals, and communicate with the stakeholders.

Transcription and Data Preparation

One of AI’s strongest contributions is removing manual overhead. Transcribing sessions, cleaning transcripts, and organizing raw data are time-consuming but necessary steps in any research project. AI handles these tasks reliably and quickly, giving UX researchers faster access to usable data points without sacrificing accuracy.

This is often where teams feel the biggest time savings. Depending on the type of data being collected, AI can support preparation in different ways.

For qualitative research, especially video or interview-based studies, AI can generate context-aware transcripts with speaker identification and timestamps. Many AI-powered tools also provide initial summaries that help researchers quickly orient themselves before they dive into the raw footage for qualitative analysis.

For quantitative data, AI can assist with early-stage data preparation by identifying incomplete or inconsistent entries, highlighting relevant columns or segments, and generating preliminary descriptive statistics. While this does not replace rigorous analysis, it helps researchers focus their attention on the parts of the dataset that matter most.
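To make the quantitative case concrete, here is a minimal sketch of that kind of first-pass check, written in plain Python. The field names, the 1 to 5 satisfaction scale, and the sample responses are all hypothetical, and a real AI-assisted tool would likely do far more than these two rules. The point is only to show the shape of the output: a list of flagged entries for a researcher to review, plus preliminary statistics on the rest.

```python
from statistics import mean, median

# Hypothetical survey export: one dict per response (field names are illustrative).
responses = [
    {"participant": "P1", "satisfaction": 4, "task_time_s": 62.0},
    {"participant": "P2", "satisfaction": None, "task_time_s": 48.5},  # incomplete
    {"participant": "P3", "satisfaction": 9, "task_time_s": 55.0},     # outside the assumed 1-5 scale
    {"participant": "P4", "satisfaction": 5, "task_time_s": 71.5},
]


def flag_issues(row, scale=(1, 5)):
    """Return a list of human-readable problems found in one response."""
    issues = [f"missing {field}" for field, value in row.items() if value is None]
    score = row.get("satisfaction")
    if score is not None and not scale[0] <= score <= scale[1]:
        issues.append("satisfaction out of range")
    return issues


# Entries that need a researcher's attention, keyed by participant.
flagged = {r["participant"]: flag_issues(r) for r in responses if flag_issues(r)}

# Preliminary descriptive statistics on the entries that passed both checks.
clean = [r for r in responses if r["participant"] not in flagged]
summary = {
    "n_total": len(responses),
    "n_clean": len(clean),
    "mean_satisfaction": mean(r["satisfaction"] for r in clean),
    "median_task_time_s": median(r["task_time_s"] for r in clean),
}

print(flagged)
print(summary)
```

Note that the sketch only flags entries. Whether to drop, correct, or follow up on a flagged response remains the researcher's call.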

In both cases, AI accelerates the path from raw data to analysis, allowing researchers to spend less time preparing data and more time making sense of it.

Moderating Structured Studies and AI Moderators

AI performs well in research contexts that are clearly defined and predictable. AI can guide participants through structured tasks without introducing unnecessary variability that might skew results.

As AI capabilities continue to evolve, AI-moderated interviews have emerged as a viable option for user interviews and task-based sessions. When designed thoughtfully, these approaches allow teams to increase sample sizes while maintaining a consistent experience across participants. Used this way, AI moderation helps balance scale and quality.

First-pass Analysis and Pattern Detection

AI is well suited for scanning large datasets and surfacing high-level patterns. It can cluster responses, tag themes, summarize recurring topics, and highlight areas of alignment or disagreement across participants. This does not replace synthesis, but it gives researchers a structured starting point for deeper analysis.

When used thoughtfully, AI shortens the distance between raw data and research findings.

That said, first-pass analysis does not mean blindly feeding all data into AI. Considerations around data privacy, security, and research rigor still apply. More importantly, AI-generated patterns should not be mistaken for final insights. Without human interpretation, nuanced context and actionable insights can be lost.

In practice, AI works best here as an assistant. Especially when teams are time constrained, it helps researchers get oriented quickly, identify promising directions to explore, and decide where to invest their analytical effort next.

Support with Reporting

AI can also help researchers communicate insights more efficiently. Drafting summaries, organizing findings into themes, and outlining reports or presentations are all areas where AI can support structure and clarity. Researchers still decide what matters most, but AI helps shape how insights are presented.

In this stage, AI supports storytelling without owning the narrative. It acts as an assistant or co-pilot that can connect dots across the data, helping researchers translate complex findings into clearer, more cohesive outputs.

Where Human Touch Still Remains Valuable

As outlined earlier, most effective uses of AI in UX research focus on reducing manual, repetitive tasks, such as drafting from prior study iterations or handling data transcription. Even when AI supports analysis, its role is typically limited to preliminary pattern detection. Our oversight remains essential to validate outputs, interpret meaning, and ensure insights are grounded in real user behavior.

Ultimately, the core responsibility of research still sits with the human researcher. Making sense of data requires time, judgment, and deliberate synthesis. It involves sitting with raw inputs, organizing them thoughtfully, identifying patterns, and deciding how findings should shape decisions. AI can assist along the way, but it does not replace this work.

Study Execution

For user testing that requires live moderation, human researchers still need to lead. This is especially true for usability testing, where effective facilitation depends on interpreting visuals, interactions, and moments of confusion, not just listening to spoken responses. Knowing when to pause, probe, or redirect requires real-time judgment that AI cannot reliably replicate.

In these settings, consider AI's role as that of an assistant. While the researcher focuses on moderating the session, guiding participants, and responding to what unfolds, AI can operate in the background, transcribing the conversation, capturing notes, and time-stamping key moments that may be worth revisiting later. This division of labor allows researchers to stay present in the session without worrying about missing details.

Exploratory or Generative Research

When the goal is to uncover unmet needs, mental models, or emerging behaviors, conversations rarely follow a predictable path. Skilled researchers rely on intuition, contextual understanding, and experience to recognize when a moment deserves deeper exploration. The difference between a strong and a less experienced researcher often lies in the ability to ask revealing follow-up questions that surface meaning, not just responses.

AI performs best when structure is present. In open-ended discovery work, its limitations become more visible. Without the ability to interpret context or adapt questioning dynamically, AI-led approaches can result in shallow follow-ups or missed opportunities to explore what truly matters.

High Stakes & Strategic Research

Research that informs pricing, product direction, or long-term strategy carries significant consequences. Small misinterpretations can cascade into large downstream decisions. In these contexts, insight quality depends on careful interpretation, triangulation across multiple data sources, and a holistic understanding of stakeholder and business context.

AI can assist with analysis, but it should not be the primary driver of conclusions when the cost of being wrong is high. While AI can surface patterns or summarize findings, organizations do not make strategic decisions based solely on AI-generated insights. Human researchers are responsible for validating hypotheses, stress-testing assumptions, and ensuring that insights are grounded in customer feedback before they influence strategy.

Complex Workflows and Domain-heavy Contexts

In industries such as finance, healthcare, or enterprise software, user behavior is shaped by regulation, policy, and specialized domain knowledge. AI can struggle in these environments, where terminology is nuanced and workflows are tightly constrained. It may oversimplify processes or misinterpret behaviors without understanding the underlying rules that govern them.

This becomes especially apparent in usability studies or prototype testing that involve behavioral data. The work is not just about interpreting spoken user feedback, but about observing interactions, understanding interface complexity, and identifying friction points that emerge through behavior rather than language alone.

Human researchers bring domain fluency and the ability to challenge assumptions. This contextual understanding is critical for preventing misleading insights.

Final Synthesis & Decision Making

Synthesis is where meaning is created. It requires prioritization, judgment, and the ability to connect research findings to broader business goals. AI can support synthesis by organizing data or highlighting surface-level patterns, but even with deliberate prompt engineering it cannot determine which insights are most impactful or how they should influence decisions.

That responsibility sits with the UX professionals. Deciding what matters most, weighing evidence, and prioritizing insights based on impact, risk, and feasibility are inherently human tasks.

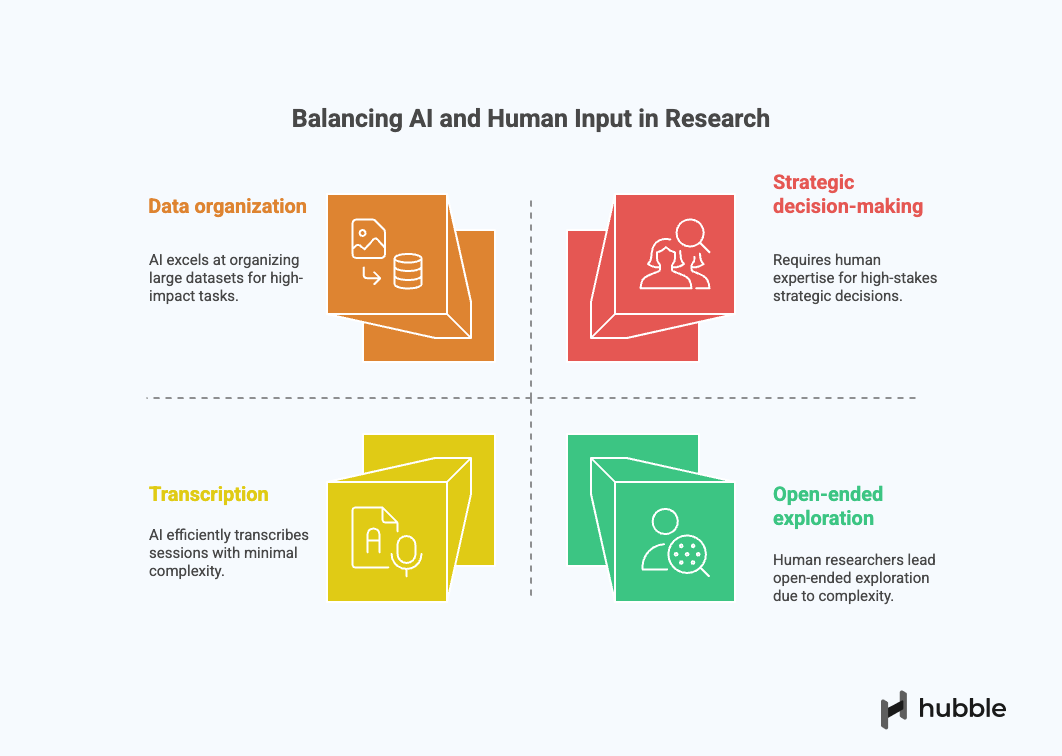

A Practical Framework for Balancing AI and Human Input

Once AI is treated as a collaborator rather than a replacement, the goal shifts from maximizing automation to making deliberate choices. A practical way to strike that balance is to evaluate each stage of the user research process through a few guiding questions.

1. Is the task structured or interpretive?

AI performs best when the task is structured, repeatable, and well defined, such as drafting from existing inputs, transcribing sessions, or organizing large datasets.

Tasks that require layers of interpretation or sense-making should remain human-led.

2. What is the cost of getting it wrong?

When research informs high-stakes decisions like pricing, product direction, or long-term strategy, the tolerance for error is low.

In these cases, AI should support analysis, not drive conclusions. Human researchers are responsible for validating outputs and stress-testing insights before they influence decisions.

3. Does the work require real-time adaptation or domain knowledge?

If conducting research involves facilitation, understanding nuanced or complex concepts, or open-ended exploration, human researchers should lead.

In domain-heavy contexts such as finance, healthcare, or enterprise systems, understanding regulations, constraints, and workflows is essential. Human expertise helps prevent oversimplification and misinterpretation that can arise from automated analysis.

4. Are we validating or generating insights?

AI can help validate existing hypotheses by scanning for patterns or summarizing known themes. Generating new insights, especially those that challenge assumptions, still requires human creativity and interpretation.
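For teams that like to make such checklists explicit, the four questions above can be sketched as a small decision helper. This is an illustrative heuristic only: the `ResearchTask` fields, the ordering of the checks, and the three labels are assumptions made for this sketch, not a prescribed rule, and real decisions will involve more judgment than four booleans can capture.

```python
from dataclasses import dataclass


@dataclass
class ResearchTask:
    """Hypothetical description of one step in a research workflow."""
    name: str
    structured: bool                # Q1: structured and repeatable, rather than interpretive?
    high_stakes: bool               # Q2: is the cost of getting it wrong high?
    needs_live_or_domain: bool      # Q3: real-time adaptation or domain knowledge required?
    generating_insights: bool       # Q4: generating new insights rather than validating?


def who_leads(task: ResearchTask) -> str:
    """Suggest who should lead a task, based on the four guiding questions."""
    # Interpretive, adaptive, domain-heavy, or generative work stays with the researcher.
    if task.needs_live_or_domain or task.generating_insights or not task.structured:
        return "human-led"
    # Structured work that feeds high-stakes decisions: AI supports, humans validate.
    if task.high_stakes:
        return "AI-assisted, human-validated"
    # Structured, lower-risk work is the best candidate for delegation.
    return "AI-led, human-reviewed"


tasks = [
    ResearchTask("Transcribe sessions", True, False, False, False),
    ResearchTask("First-pass pattern scan for a pricing study", True, True, False, False),
    ResearchTask("Moderate discovery interviews", False, False, True, True),
]

for task in tasks:
    print(f"{task.name}: {who_leads(task)}")
```

Even in the most delegated case, the label keeps a human review step, in line with the oversight described earlier in the article.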

Balancing AI and human judgment is not a one-time decision. It is an ongoing practice that evolves as tools improve and research questions change. When UX teams are intentional about this balance, AI becomes a powerful collaborator.

What Human-AI Collaboration Means for the Future of UX Research

As AI becomes more embedded in UX research tools and workflows, it is reshaping how UX researchers work. The future of UX research is less about manually executing every step and more about guiding strategy, making sense of insights, and ensuring research informs decisions continuously throughout the product development lifecycle. By shortening research turnaround time, AI enables research to move closer to the pace of product development.

AI will continue to take on more of the operational load. It will help researchers move faster, process more data, and reduce friction across the research lifecycle. These gains are real, and they matter. They give teams the capacity to run more studies, learn more frequently, and respond more quickly to change.

But the value of research has never come from speed alone.

Human researchers bring intuition, empathy, and curiosity. They ask why a pattern matters, not just whether it exists. They recognize when an outlier warrants a careful review. They understand organizational context, ethical responsibility, and the downstream impact of decisions informed by research.

In practice, this means being deliberate. Delegating structured and repetitive work to AI. Staying closely involved where nuance, emotion, and judgment matter. And continuously revisiting those boundaries as tools evolve and research questions change.

FAQs

Yes, but intentionally. AI is already part of many UX research workflows, whether through drafting, transcription, analysis, or moderation. The key is not deciding whether to use AI, but understanding where it adds value and where human judgment should lead.

AI works best as a collaborator that supports structured, repeatable tasks rather than replacing critical thinking or interpretation.

Not necessarily. Research quality depends on how AI is used, not whether it is used. When AI supports structured tasks like transcription, summarization, or pattern detection, it can improve efficiency without compromising rigor. Quality issues tend to arise when AI replaces human judgment in areas that require context, empathy, or interpretive decision making.

AI is effective in areas that are structured and time intensive, such as drafting research materials, transcribing sessions, organizing large datasets, detecting high-level patterns, and supporting reporting. These tasks benefit from speed and consistency, allowing UX researchers to spend more time on synthesis, decision making, and stakeholder alignment.

Teams can evaluate each research step by asking whether the task prioritizes speed over depth, structure over exploration, or efficiency over empathy. If nuance, emotional awareness, or ethical responsibility are central to the outcome, human leadership is essential.

In many cases, the most effective approach is a hybrid one, where AI supports execution and humans own interpretation and decisions.