User research is changing, fast, as AI seeps into every part of the workflow. With releases like GPT-5 and other advanced models, what felt novel a few months ago can feel outdated today. The baseline keeps rising, and so do expectations for what research should deliver.

This shift raises a hard question for every UX practitioner. What does research look like when AI and agentic systems can automate the busywork and even attempt parts of the thinking? Where do we add the most value when speed is easy to buy?

Teams want velocity, while good research asks for depth. Automation can summarize, while human nuance gives meaning. Research methods like interviews, surveys, and usability tests still reveal the why behind behavior, yet they bring familiar constraints: limited bandwidth, time-consuming facilitation and analysis, siloed insights, and small samples that do not scale easily.

AI agents are ushering in a transformative shift by not only accelerating research, but changing how it is done. We are at an inflection point where AI is becoming a key part of the product experience itself, and user research must also study how AI thinks and behaves in the hands of customers. AI is not replacing researchers, but it is reshaping how products are built and how research contributes.

In this article, we will look at the current state of practice, the gains and trade-offs across common methods, and where AI can raise speed, quality, and scale without losing the judgment that makes research valuable.

The Current State of UX Research

User research remains the backbone of product decisions as the tooling keeps getting better. Many teams report growing demand for research year over year, fueled by the need for continuous discovery and research democratization (though there is a caveat).

Types and Stages of Research

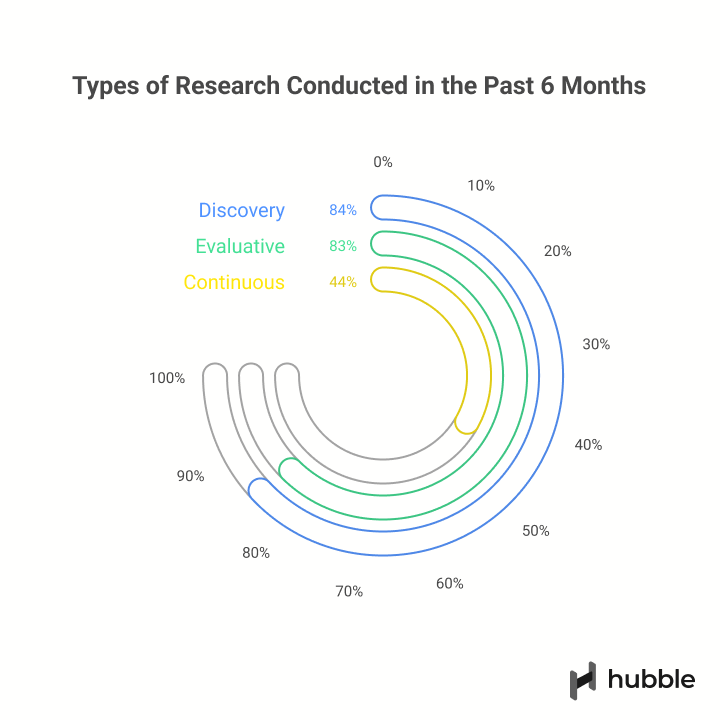

Recent 2024 industry reports suggest that a large majority of teams run both discovery and evaluative work, and a meaningful share run continuous research. 81 percent of respondents ran a mix of discovery and evaluative work, and 44 percent ran continuous research over the past six months.

In practice, most decisions still lean on discovery, which makes sense as it defines the problem space, clarifies customer needs, and surfaces pain points that shape the roadmap.

"Skip discovery and you are guessing. Discovery helps us frame the problem, understand our customers, and surface pain points that guide the roadmap."

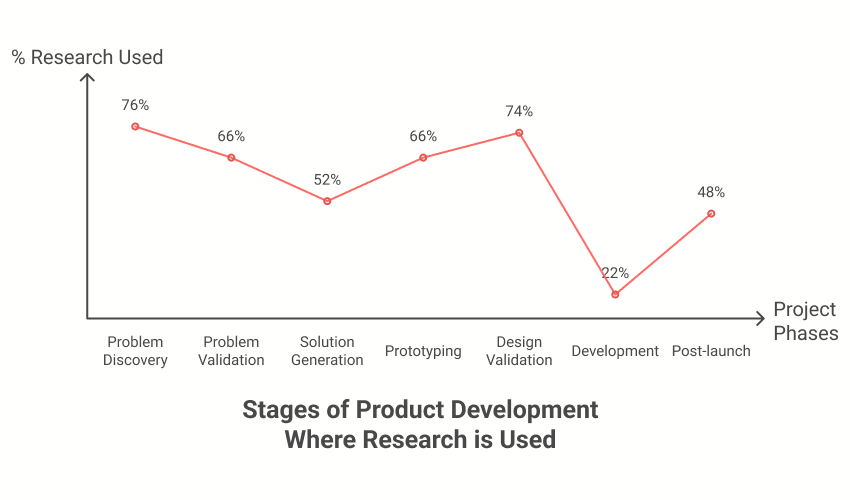

According to the report, research is woven into every stage of the product development cycle, but it is most often applied during problem discovery (76%) and design validation (74%). These are the moments when teams are diverging, exploring the unknowns, mapping the problem space, and grounding ideas in real user needs before converging on solutions.

Research Methods

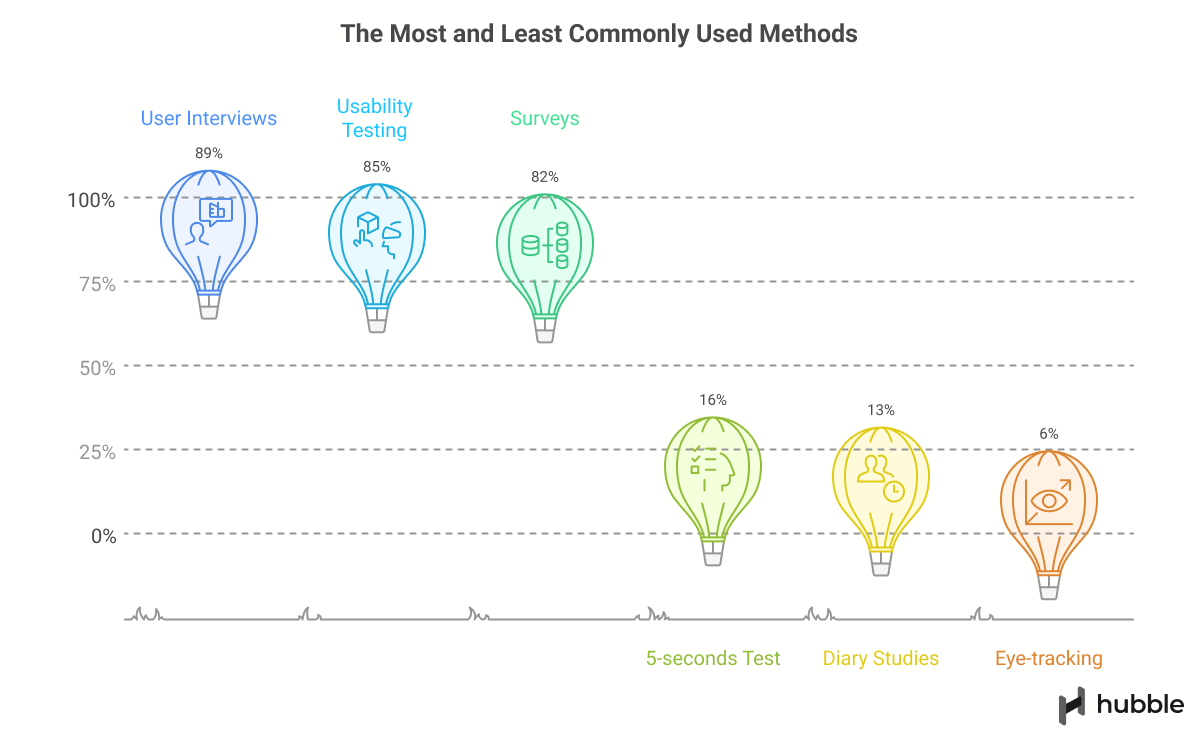

The core toolkit is steady. Surveys, in-depth interviews, and usability testing remain the most used methods across teams. They serve their purpose, are accessible, and map well to common product questions.

At the same time, usage drops off for more specialized methods like five-second tests, diary studies, and eye tracking. The reasons are predictable: they require more expertise, time, and deliberate setup, and many teams have fewer chances to apply them.

Sticking with the big three is not a problem by itself, as it shows they still deliver value. Nevertheless, it is a missed opportunity when the way we conduct research has not changed or broadened over time.

Taken together, the picture is clear. Research demand grows each year, but teams do not have the time or muscle to match it. The core toolkit still carries the load, while specialized methods sit on the shelf, underused. With AI potentially touching many parts of the research process, researchers can shift attention from chores to creativity, and from collection to interpretation and decisions.

Challenges in Current Research Practices

Even with better tools and a growing appreciation for the value of research, many teams still face familiar roadblocks. Practitioners widely agree that the UX research process leaves room for improvement. Today’s approach is still largely manual, requiring expertise and hands-on oversight at each critical stage, and yet it is often carried out under tight timelines and with limited resources.

1. Slow, resource-heavy processes

Recruiting participants, running sessions, and synthesizing research findings all take time. This makes it difficult to run research at the pace most product teams now operate, especially in agile environments where priorities shift quickly.

According to dscout's 2021 study, a research project typically takes 42 days, with discovery research taking roughly twice as long (60 days) as evaluative research (28 days).

2. Scaling without losing quality

Scaling moderated research is inherently difficult as sessions are resource-intensive and demand skilled facilitation. To cover more ground, UX researchers often complement them with unmoderated studies or surveys to triangulate research data.

While these methods help with reach, they can introduce noise or unreliable data, especially when participant engagement drops or responses are influenced by misunderstanding the task. This can leave researchers juggling higher volume but lower quality inputs.

"We get over 600 responses in a few days, but it told us very little about why conversion dropped."

One of the main drawbacks of surveys is that they rarely capture the full context or nuance behind responses, since they are primarily designed for quantitative data collection. In many cases, more than half of the data ends up unusable because it is incomplete, inconsistent, or lacks the detail needed to draw meaningful conclusions.

3. Democratization without UX maturity

UX research democratization has real benefits, empowering non-researchers to run their own studies and reducing bottlenecks. But it also comes with risks, especially when the organization’s UX maturity is low. Without clear frameworks, training, and oversight, democratized research can lead to inconsistent methods, weak data, and poor decision-making.

One product designer described the challenge bluntly:

“I feel like my design superiors expect me to ‘just know’ how to do UX research, and to make it an integral part of every project.”

In practice, it often falls to trained researchers to ensure that non-researchers are running studies correctly. At Hubble, we’ve seen this firsthand. Many designers and PMs take the lead on research, but still need a second set of eyes to make their work robust. The gaps can be subtle but consequential: avoiding leading questions in an interview, writing screener questions that effectively filter participants, or designing surveys that capture actionable insights rather than dead-end data.

When these details slip through, the result is the same: time is spent collecting data that will never lead to meaningful outcomes. Democratization works best when paired with organizational commitment to research quality, not as a shortcut to bypass trained expertise.

4. Limited reach in participant pool

Many UX researchers and teams still draw from small, convenient samples, often relying on email lists as their main recruiting channel. The biggest bottlenecks in this process are finding qualified participants—especially in niche B2B spaces where the target audience is small—and the sheer time required to recruit.

Defining participant criteria, writing and setting up screeners, and coordinating outreach can take days or even weeks before a single session is run. 36.3% of respondents cited the recruitment process as one of the biggest sources of project delays.

5. Bottlenecks in data analysis

Collecting data is relatively straightforward compared to analyzing qualitative data and uncovering patterns. Transcribing interviews, coding qualitative user feedback, and mapping user insights to product decisions often take more time than anyone budgets for.

Numbers from dscout make this clear: 63.1% of UX researchers said they had "just enough time" for their most recent project, while over half (51%) said they needed more time specifically for analysis.

Reviewing moderated session recordings is a prime culprit. One hour of footage can require several more to watch closely, take detailed notes, and distill patterns. As a result, teams often feel pressure to rush or skip parts of the analysis process, which risks losing detailed nuance.

The cost of cutting corners is high. In the same survey, 76.9% of researchers admitted that when time ran short, "the full extent of insights were not mined and translated." In other words, valuable signals were left behind.

AI Breakthroughs: Closing the Gap Between Depth and Speed

What’s missing in the current user research market is a truly scalable and adaptive way to capture both depth and speed in insights without the heavy manual lift. While there are plenty of tools for surveys, prototype tests, and interview scheduling, most still rely on rigid flows or human moderation.

This forces researchers into a trade-off: choose lightweight methods that sacrifice context (such as unmoderated tests or surveys) or rich methods that are slow and resource-heavy (like moderated user interviews).

The real opportunity is in building a system that can actively engage with users in real time, ask context-aware follow-up questions, and synthesize valuable insights instantly—blending the depth of a skilled researcher with the scale and efficiency of software.

Recent AI breakthroughs make this vision far more realistic. Large language models can now:

- Run adaptive conversations that change course based on a participant’s answers.

- Automate analysis by transcribing, coding, and clustering themes within minutes.

- Synthesize mixed data from qualitative and quantitative inputs into coherent, actionable narratives.

Together, these capabilities can turn research into a continuous, self-improving process that scales to hundreds of participants and frees human researchers to focus on strategic thinking and decision-making.
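To make the "coding and clustering themes" step concrete, here is a minimal sketch in Python. It is only an illustration: it uses simple keyword matching as a stand-in for what a language model would do, and the codebook, theme names, and function names (`CODEBOOK`, `code_response`, `cluster_by_theme`) are hypothetical, not taken from any particular tool.

```python
from collections import defaultdict

# Hypothetical codebook: theme -> keywords that signal it (illustrative only).
CODEBOOK = {
    "pricing": {"price", "expensive", "cost"},
    "onboarding": {"signup", "setup", "tutorial"},
    "performance": {"slow", "lag", "crash"},
}

def code_response(text, codebook=CODEBOOK):
    """Return the sorted list of themes whose keywords appear in the text."""
    # Lowercase each word and strip surrounding punctuation before matching.
    words = {w.strip(".,!?;:\"'").lower() for w in text.split()}
    return sorted(theme for theme, kws in codebook.items() if words & kws)

def cluster_by_theme(responses, codebook=CODEBOOK):
    """Group responses under each matched theme; unmatched ones go to 'uncoded'."""
    clusters = defaultdict(list)
    for response in responses:
        themes = code_response(response, codebook) or ["uncoded"]
        for theme in themes:
            clusters[theme].append(response)
    return dict(clusters)

if __name__ == "__main__":
    sample = [
        "The setup was slow.",
        "Too expensive!",
        "I like it",
    ]
    for theme, items in cluster_by_theme(sample).items():
        print(theme, len(items))
```

A response can land in more than one theme, and anything the codebook misses is kept under "uncoded" so a researcher can review it rather than lose it. That review step is where human judgment stays in the loop.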

AI in Current User Research

AI-powered research tools are becoming increasingly common, with many platforms now introducing AI-driven features to streamline different stages of the research process.

When AI first entered the conversation, there was skepticism, especially among experienced researchers, about its value. The prevailing view was that user research is inherently about direct, human-to-human conversations and building empathy with customers, something machines couldn’t replicate.

However, in recent years, we’ve seen a shift. AI is no longer positioned as a replacement for human connection, but rather as an enabler across multiple touchpoints in the research workflow. From transcription and coding, to real-time moderation and synthesis, AI is finding a role in reducing repetitive tasks and expanding researchers’ capacity for strategic thinking.

Between 2023 and 2024, adoption rates grew significantly. The share of survey respondents who said they actively use AI for their work jumped from 20% to 53%, and that number is expected to rise in the coming years. In Maze’s 2024 report, 56% of experts said AI helped improve team efficiency, while 50% reported reduced research turnaround times.

With more powerful AI models and APIs entering the market, a wave of new applications is emerging: synthetic users for simulated feedback, AI moderators that guide unmoderated interviews, AI-powered analysis that surfaces patterns instantly, and generative insight summaries that save hours of manual synthesis.

The trend is clear: AI is shifting from a novelty to an integral part of modern research workflows, helping teams scale, move faster, and focus more of their energy on meaning and decision-making.

The Next Era of UX Research

For decades, user research has relied on a handful of methods, each with trade-offs that limit either depth or scale. Processes remain manual, recruiting is slow, and analysis often takes longer than the studies themselves. Even with better tools, the fundamentals had not shifted, until now.

AI offers a way forward. By blending real-time engagement, intelligent follow-ups, and instant synthesis, it can close the gap between rich qualitative insight and scalable quantitative reach. This shift will turn research from occasional projects into a continuous feedback loop that is more reliable.

FAQs

AI can handle repetitive, time-consuming tasks like participant recruitment, transcription, and initial data coding, freeing researchers to focus on interpretation and human connection. By using AI as an assistant rather than a replacement, teams can preserve empathy while gaining efficiency.

AI is being used for participant recruitment, sentiment analysis, automated transcription, summarizing qualitative data, generating research reports, moderating unmoderated interviews, and even simulating user personas for rapid concept testing. These use cases help speed up the process while keeping researchers focused on interpretation and decision-making.

Traditional methods often force a trade-off between speed and depth. Surveys and unmoderated tests can scale quickly but lack context, while moderated interviews provide nuance but are slow and resource-heavy. Recruiting, running, and analyzing studies remain manual bottlenecks.

No. AI is more likely to augment research than replace it. The human role shifts toward framing the right questions, interpreting findings within context, and translating insights into product strategy—areas where human judgment is critical.