User interviews are one of the go-to methods in UX research because they allow product teams to tap into rich qualitative data that uncovers user needs, motivations, pain points, and workflows—insights that analytics alone can’t provide. Traditionally, researchers have relied on two main approaches: human-moderated interviews, where a researcher guides the conversation in real time, and unmoderated think-aloud sessions, popularized by research platforms, where participants complete tasks independently while verbalizing their thoughts.

With the rise of artificial intelligence, a third option has emerged: AI-moderated interviews. Powered by natural language processing and automation, these interviews promise scalability and efficiency while still capturing conversational depth, with AI dynamically guiding the discussion. This evolution raises an important question for researchers and product teams: How do AI interviews compare to human-moderated and unmoderated research, and when should each be used?

This guide serves as a foundational piece to answer that question. We’ll break down what each method involves, highlight their strengths and weaknesses, and provide a side-by-side comparison to help you choose the right approach for your research goals.

What Are AI Interviews?

An AI interview is a new research method where participants interact with an AI moderator instead of a human researcher. Leveraging rapidly evolving natural language processing (NLP) and AI models, the AI facilitates the conversation by asking key questions and anchoring onto participants’ specific experiences to generate relevant follow-up questions.

The goal is to capture the richness of a live interview without the operational overhead of managing schedules, coordinating calendars, or handling last-minute cancellations, which often drain time and resources in traditional moderated studies.

Unlike unmoderated studies or surveys that rely on a fixed set of questions, AI interviews can adapt dynamically. For example, if a participant expresses frustration with a checkout flow, the AI can immediately ask clarifying questions instead of sticking rigidly to a script. Beyond facilitation, many platforms now integrate transcription, annotation, and AI-powered synthesis, enabling teams to move quickly from raw input to actionable insights.

AI interviews are not designed to replace human researchers. Instead, they serve as a middle ground between human-moderated sessions and unmoderated tests. By leveraging AI, interviews become more scalable and efficient than live sessions, while remaining more conversational and exploratory than unmoderated testing.

How Do AI-Moderated Interviews Work?

AI moderation may sound theoretical at first: how practical is it for an AI agent to facilitate a conversation in real time? With the right setup, AI interviews can balance quality and scalability. Implementation differs across AI-powered tools, but here’s how Hubble optimizes the process.

1. Research Setup

An AI interview begins with the researcher configuring goals, context, and a discussion guide. These inputs act as guardrails so that the AI stays aligned with study objectives.

- The agent is primed or prompted with instructions on what to ask, when to probe, and how to adapt its flow without going off-track.

- Additional parameters, such as interview length and a fully customizable discussion guide, allow you to tune the agent’s behavior so it closely reflects your research objectives.
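
To make the setup step concrete, here is a minimal sketch of how goals, context, a discussion guide, and an interview length could be combined into instructions for an AI moderator. It is an illustration only and does not reflect Hubble's actual configuration or API: `StudySetup`, `build_moderator_instructions`, and the 15-minute default are all hypothetical names and values.

```python
from dataclasses import dataclass


@dataclass
class StudySetup:
    """Hypothetical container for the inputs a researcher configures."""
    goals: list[str]
    context: str
    discussion_guide: list[str]
    max_minutes: int = 15  # assumed default for illustration only


def build_moderator_instructions(setup: StudySetup) -> str:
    """Turn the setup into plain-text instructions for an AI moderator."""
    lines = [
        "You are moderating a user interview.",
        f"Product context: {setup.context}",
        "Research goals:",
        *[f"- {goal}" for goal in setup.goals],
        "Discussion guide (ask in order, probe when answers are vague):",
        *[f"{i}. {q}" for i, q in enumerate(setup.discussion_guide, start=1)],
        f"Keep the session under {setup.max_minutes} minutes.",
        "Stay within the research goals; do not introduce unrelated topics.",
    ]
    return "\n".join(lines)


setup = StudySetup(
    goals=["Understand why shoppers abandon checkout"],
    context="Mobile checkout flow for an online store",
    discussion_guide=[
        "Walk me through the last time you bought something on your phone.",
        "What, if anything, slowed you down at checkout?",
    ],
)
print(build_moderator_instructions(setup))
```

The point of the sketch is that the guardrails are ordinary, reviewable text: a researcher can read the generated instructions and check that they match the study objectives before any participant joins.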

2. Participant Interaction & Dynamic Probing

Once a study is live, participants join the session asynchronously. Instead of a live moderator, they see a disclaimer and simple instructions before interacting with an AI agent that poses questions, listens to answers, and adjusts follow-up questions in real time.

The real strength of AI moderation lies in its ability to probe dynamically. If a participant mentions frustration, the AI can immediately ask clarifying questions to uncover the “why” behind the pain point, something static surveys can’t achieve.

3. Data Capture & Analysis

Multiple sessions can run in parallel, making the approach highly scalable. Each session is recorded, transcribed, and organized into digestible outputs.

Synthesis becomes faster with AI-powered summaries that surface key quotes, themes, and qualitative insights, providing a strong first pass and reducing time to insight.

Human vs. AI Moderated Research

There is still debate and some skepticism around using AI to conduct research. Yet with the right setup and guardrails, AI can complement traditional, manual research processes and gradually establish itself as a novel approach to conducting interviews.

1. Lowering the Entry Barrier

User interviews have long been perceived as having a high barrier to entry, especially for teams that are just getting started. Teams often assume you need to be a trained researcher to ask the right questions, and designers may feel too nervous to speak directly with customers, let alone extract meaningful insights from those conversations.

AI interviews lower that barrier, giving product teams, especially those moving fast but lacking dedicated research infrastructure, a way to run focused, well-scoped qualitative research without requiring interviewing expertise.

2. Trade-Offs Between AI and Human Moderators

There are clear trade-offs when comparing AI and human-moderated interviews. AI can overcome some of the practical limitations of manual research methods, such as scheduling complexity, limited scalability, and the time required for live facilitation and synthesis.

Nevertheless, AI moderators cannot fully replace the richness of live interaction. Human moderators can read facial expressions, body language, and subtle emotional cues, then adapt their follow-up questions in the moment.

Human researchers also benefit from rapport and vulnerability. A skilled user researcher can strategically ask for help: "I really need your honest feedback to better understand this experience." This vulnerability often encourages participants to open up and contribute more proactively.

At the end of the day, user research is deeply human work. Direct touchpoints with customers not only generate valuable insights but also help close the gap between product teams and the customers they serve, especially when non-researchers are actively involved throughout the process.

3. Disclosing Personal Information with AI

The dynamic between AI and participants introduces another layer worth watching. Early studies suggest some people feel more comfortable sharing personal or sensitive information with an AI than with another human (similar to how many use AI for mental health check-ins or private journaling). However, this behavior can vary with cultural nuances and context. Thus, researchers must keep in mind who their target audience is when designing AI-facilitated studies.

"The more functional or emotional conversation a user makes with AI agents, the more likely the user discloses his/her information to the devices"

Below, we look at a side-by-side comparison of AI-moderated, human-moderated, and unmoderated tests.

Comparing AI-Moderated, Human-Moderated, and Unmoderated Testing

Consider AI-moderated customer interviews as a bridge between traditional human-facilitated, in-depth interviews and unmoderated tests. They’re more scalable than human moderation while remaining more dynamic and conversational than unmoderated testing.

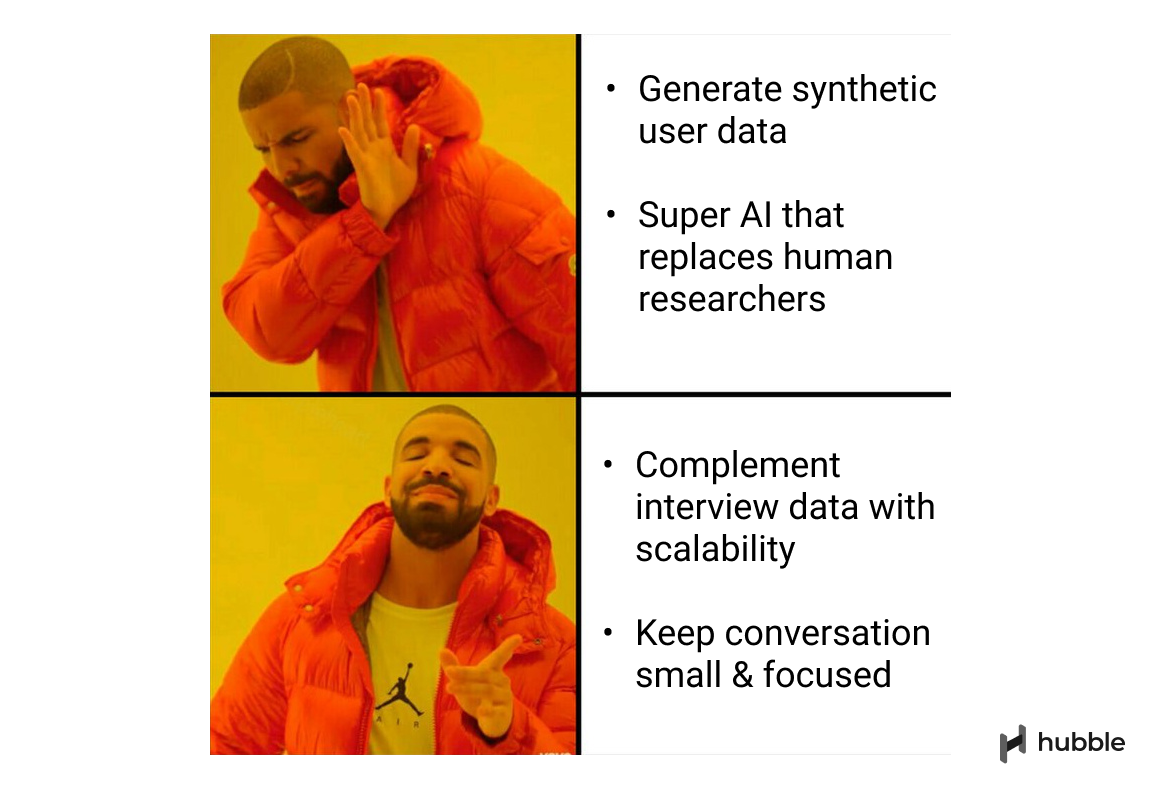

By now, you have a good sense of what an AI interview is. Just as important is understanding what it isn’t:

- Not a synthetic user: It doesn’t generate fake responses or synthetic data; it facilitates real conversations with real participants.

- Not a researcher replacement: It's not a “super AI agent” meant to replace human researchers, but a tool to assist and scale research.

What Makes Hubble’s AI Interviews Powerful

1. Modular, Focused Conversations

Instead of asking the AI to run one long 30-minute conversation, which can easily drift off topic, Hubble breaks the interview into modular tasks. Each task has a clear scope or learning objective, so the AI stays focused while still having the full context of previous tasks. This structure keeps conversations relevant and prevents the AI from wandering into unrelated areas.
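
As a rough illustration of the modular idea, the sketch below scopes the agent to one task at a time while passing along a summary of earlier tasks. It is not Hubble's implementation: `InterviewTask`, `build_task_prompt`, and the placeholder summaries are hypothetical and exist only so the example runs on its own.

```python
from dataclasses import dataclass


@dataclass
class InterviewTask:
    """One module of the interview with its own scope (hypothetical)."""
    name: str
    objective: str


def build_task_prompt(task: InterviewTask, prior_summaries: list[str]) -> str:
    """Scope the agent to one task while passing along earlier context."""
    history = "\n".join(f"- {s}" for s in prior_summaries) or "- (none yet)"
    return (
        f"Current task: {task.name}\n"
        f"Objective: {task.objective}\n"
        f"What the participant has shared so far:\n{history}\n"
        "Only ask questions that serve the current objective."
    )


tasks = [
    InterviewTask("Current workflow", "Learn how the participant tracks expenses today"),
    InterviewTask("Pain points", "Identify what is frustrating about that workflow"),
]

summaries: list[str] = []
for task in tasks:
    print(build_task_prompt(task, summaries), end="\n\n")
    # In a real session the summary would come from the conversation itself;
    # here it is a placeholder so the example is self-contained.
    summaries.append(f"{task.name}: (summary of participant's answers)")
```

Each prompt names a single objective, which is what keeps the conversation from drifting, while the running list of summaries gives later tasks the context of earlier ones.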

2. Context-Driven Customization

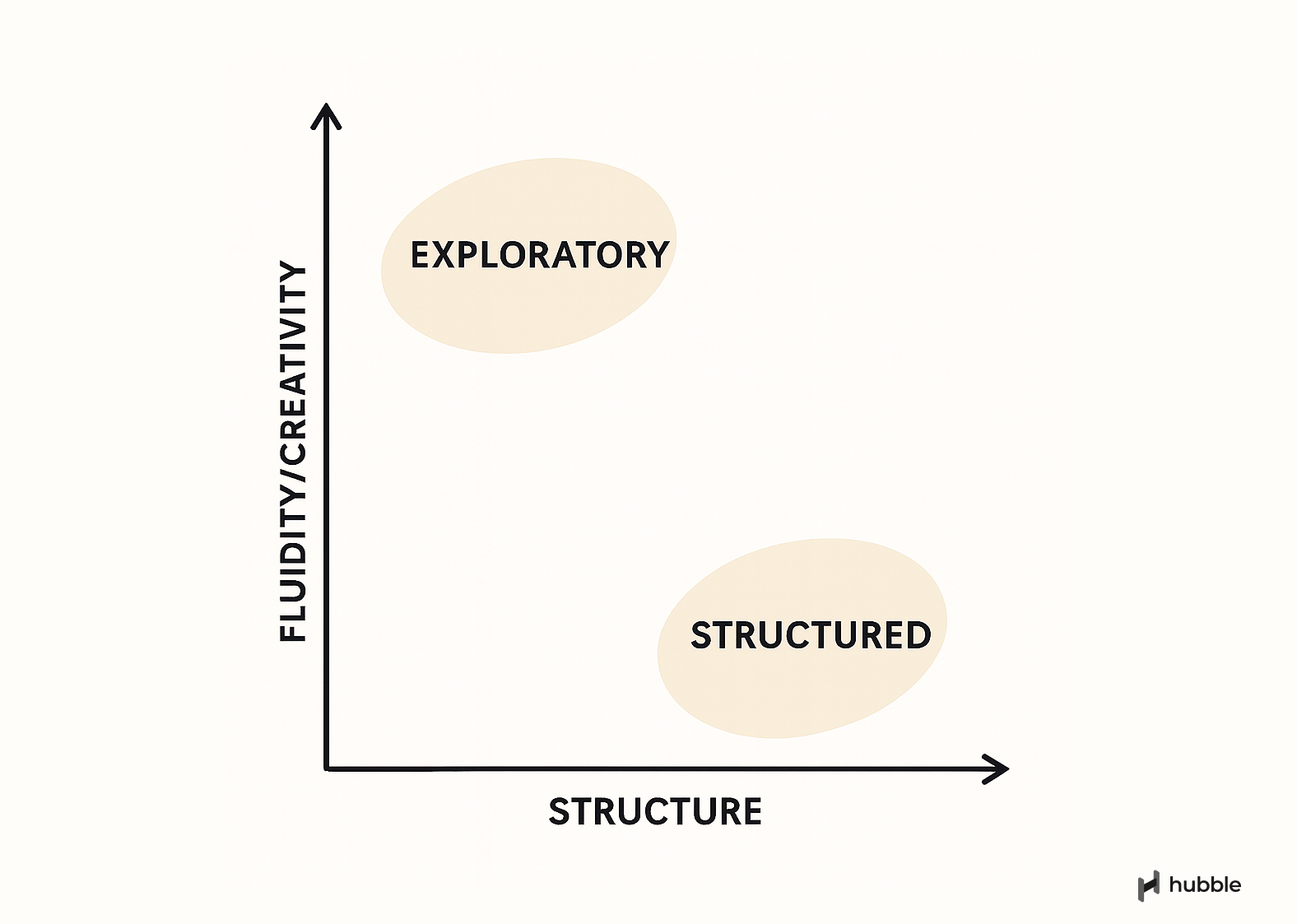

Hubble lets you give the AI guardrails so it understands your research needs and what insights need to be extracted. Where you are in the product development cycle shapes your approach. Sometimes you’ll have a clear list of research questions and hypotheses to validate; other times, only high-level goals. Either way, the AI can adjust and run the interview effectively.

You can add a fully customizable discussion guide to shape the conversation. Even high-level instructions can lead to natural, insightful dialogue with customers.

- Open-ended & Unstructured: If you’re in discovery mode, you can provide just a few broad questions. The AI will fill in gaps, ask creative follow-up questions, and anchor to what participants share.

- Semi-structured: With only a handful of key prompts, the AI can balance consistency and adaptability — sticking to the essentials while probing deeper where it matters.

- Structured: If you have clear hypotheses or questions to validate, you can give the AI a detailed script. It will follow closely to ensure consistency across sessions.

3. Visual Stimuli & Prototype Feedback

Hubble also supports testing images and visual assets along with the AI facilitation. You can upload static screens, quick prototypes, or marketing visuals and let the AI probe participants about first impressions, clarity, discoverability, or other design attributes.

Our help article on setting up AI interviews shows the detailed steps for configuring a study.

Implementing AI Moderation for Research

With the right setup and guardrails, AI-moderated interviews can lower the barrier to qualitative research, help teams move faster, and scale discovery work that would otherwise stall due to limited researcher bandwidth.

Human-moderated interviews remain the gold standard for deep, nuanced understanding. They allow moderators to read subtle cues, build rapport, and adapt in ways AI still can’t match. Unmoderated think-aloud testing remains the fastest, cheapest choice when you need quick usability feedback at scale.

AI moderation sits in the middle: more scalable and efficient than live interviews, yet more adaptive and conversational than unmoderated testing. It’s a practical bridge, especially for teams without dedicated research infrastructure or for researchers looking to expand reach without sacrificing too much depth. Blending these approaches can give product teams both speed and confidence.

FAQs

An AI-moderated interview is a research method where an AI agent asks participants questions and probes deeper based on their responses. It’s designed to make qualitative interviews more scalable and efficient while still capturing conversational depth.

Use AI-moderated interviews when you need to scale early discovery research, run many sessions quickly, or lack researcher bandwidth. They’re ideal for exploring new product areas, testing early concepts, and gathering qualitative feedback at speed without heavy logistics.

Human-moderated interviews offer rich nuance, empathy, and real-time adaptability, but they require scheduling and live facilitation, which are time-consuming. AI-moderated interviews remove most of that overhead, can run asynchronously at scale, and adapt follow-up questions dynamically.

AI interviews do not replace human researchers; they are a complement, not a replacement. They lower the barrier to qualitative research and save time, but human moderation is still unmatched for sensitive topics, complex workflows, and reading nonverbal cues.
