Hubble is unified user research software for product teams to continuously collect feedback from users. It offers a suite of tools including contextual in-product surveys, usability tests, prototype tests, and user segmentation to collect customer insights across different stages of product development.

Hubble allows product teams to drive decisions based on user-driven data by streamlining your research from study design and participant recruitment to study execution, data analysis, and reporting. Our goal is to help you establish a lean UX research practice so you can test your designs early and hear from your users regularly.

Follow our blog for curated articles and guides on different UX research topics to kickstart your next user research project.

Common Use Cases

Whether you're exploring user needs during discovery, validating designs in prototyping, or gathering feedback post-launch, Hubble makes it easy to reach the right users, ask targeted questions, and turn responses into actionable insights. Below are common use cases we see customers using Hubble for:

Discovery Research

- Run discovery surveys and in-depth interviews to uncover user pain points, unmet needs, and behavioral patterns.

- Scale qualitative and early discovery research with AI-moderated interviews.

- Explore early-stage problem spaces through either moderated or unmoderated interview-style surveys or open-ended prompts.

- Trigger contextual in-product intercepts to collect real-time feedback or bug reports while users are actively exploring your product.

- Conduct card sorting to explore how users naturally categorize information and features for shaping navigation and IA early.

Ideation

- Test multiple design concepts to gauge which direction resonates best with users before moving into high-fidelity design.

- Conduct preference or first impression testing to evaluate emotional response, clarity, and appeal of early visuals or messaging.

- Run iterative prototype tests to identify usability issues, gather user reactions, and refine interactions in low-to-mid fidelity prototypes.

Post-Launch & Iteration

- Run usability testing on live products (web or mobile) to catch friction points or broken flows in real user environments.

- Conduct A/B testing with follow-up surveys to measure user perception and satisfaction between design variants.

- Re-evaluate site navigation or IA through tree testing or card sorting to improve content discoverability post-launch.

- Deploy contextual surveys at key moments (e.g., after onboarding or checkout) to gather feedback and measure satisfaction.

- Monitor product changes over time by regularly pulsing users for input during each iteration cycle.

In-depth Interviews

Moderated research in Hubble lets you run live sessions with participants to converse naturally, observe their behavior, gather real-time feedback, and uncover rich qualitative insights.

With Hubble’s end-to-end moderated research workflow, you can easily create a moderated research project, set your availability, recruit and schedule participants, and handle incentive payouts. It’s designed to simplify the logistics so you can focus on meaningful conversations and insights.

- Another option to scale your research effectively is to leverage AI interviews, in which AI naturally converses and explores study topics with participants.

Concept and Usability Testing

Hubble supports importing Figma prototypes directly for user testing. For a step-by-step guide on importing prototypes into unmoderated studies, refer to this help article. To optimize prototype loading, we recommend linking a Figma file that contains only the designs being evaluated. Large file sizes may affect loading performance.

To import a Figma prototype into your unmoderated study, first add a Prototype Task to the study flow. The rendered preview section of the screen will then ask for a URL to your prototype. We encourage testing prototypes early in the design process regardless of the fidelity of the prototype.

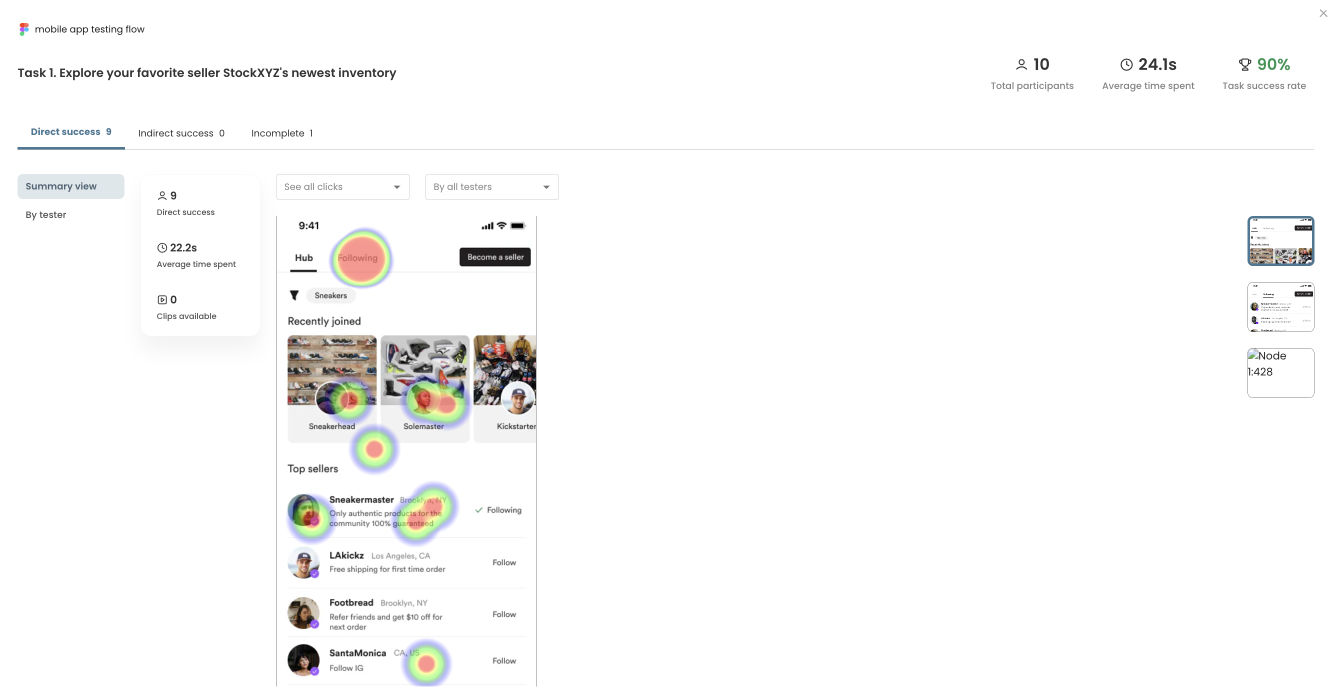

Building unmoderated tests, especially those that involve Figma prototypes, requires creating relevant tasks and sub-tasks, describing the context and scenario, and defining which actions or flows count as task success. If you have an ideal happy path that you expect your users to take, you can define that flow as an expected path. As participants interact with the prototype, their clicks and interactions are recorded and visualized as a heat map on the results page.

A/B Split Testing

A/B testing is a research technique for comparing two or more design variations to evaluate how each performs relative to the others. In Hubble, you can set up A/B testing in unmoderated studies using a combination of the randomizer, prototype tasks, and image preference questions.

For a step-by-step guide on designing A/B tests, refer to the article How can I run A/B testing or split testing? The design essentially involves including two prototype designs in the study and asking relevant follow-up questions. An image preference question is a quick way to gauge preference.
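To make the idea of randomized presentation more concrete, the sketch below shows one generic way to randomize the order in which each participant sees the design variants, using a Fisher-Yates shuffle. It is an illustration only and not Hubble's randomizer implementation; the function names, participant IDs, and variant labels are made up for this example.

```javascript
// Generic randomization sketch; not Hubble's randomizer implementation.
// Returns a new array with the items in uniformly random order (Fisher-Yates shuffle).
function shuffle(items, random = Math.random) {
  const result = items.slice(); // copy so the input array is left untouched
  for (let i = result.length - 1; i > 0; i -= 1) {
    const j = Math.floor(random() * (i + 1));
    [result[i], result[j]] = [result[j], result[i]];
  }
  return result;
}

// Give each participant their own randomized order of the design variants.
function assignVariantOrders(participantIds, variants) {
  const orders = {};
  for (const id of participantIds) {
    orders[id] = shuffle(variants);
  }
  return orders;
}

// Hypothetical participant IDs and variant labels.
console.log(assignVariantOrders(["p1", "p2", "p3"], ["Design A", "Design B"]));
```

With enough participants, random ordering like this tends to spread each variant evenly across the first and second positions, which reduces order effects when comparing designs.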

IA Testing: Card Sorting and Tree Tests

Card sorting is a research method that helps uncover users’ mental models and preferences for organizing information. Card sorting provides insights into better structuring the content of your website as participants categorize a set of cards or topics into groups that make sense to them.

With Hubble, you can run both open and closed card sort studies by inserting the Card sort task. Once added, you can customize the card sort by adding card items, shuffling them, and choosing whether to use an open or closed card sort.

For a more detailed step-by-step guide, see Use card sorting to have testers categorize information.

Hubble also lets you conduct tree tests. Tree testing helps evaluate how easily users can find information by navigating your site’s structure. It’s useful after you’ve established a draft information architecture and want to validate whether users can locate content effectively. To learn more about when to use card sorting vs. tree testing, see our dedicated blog article.

Live Website Testing

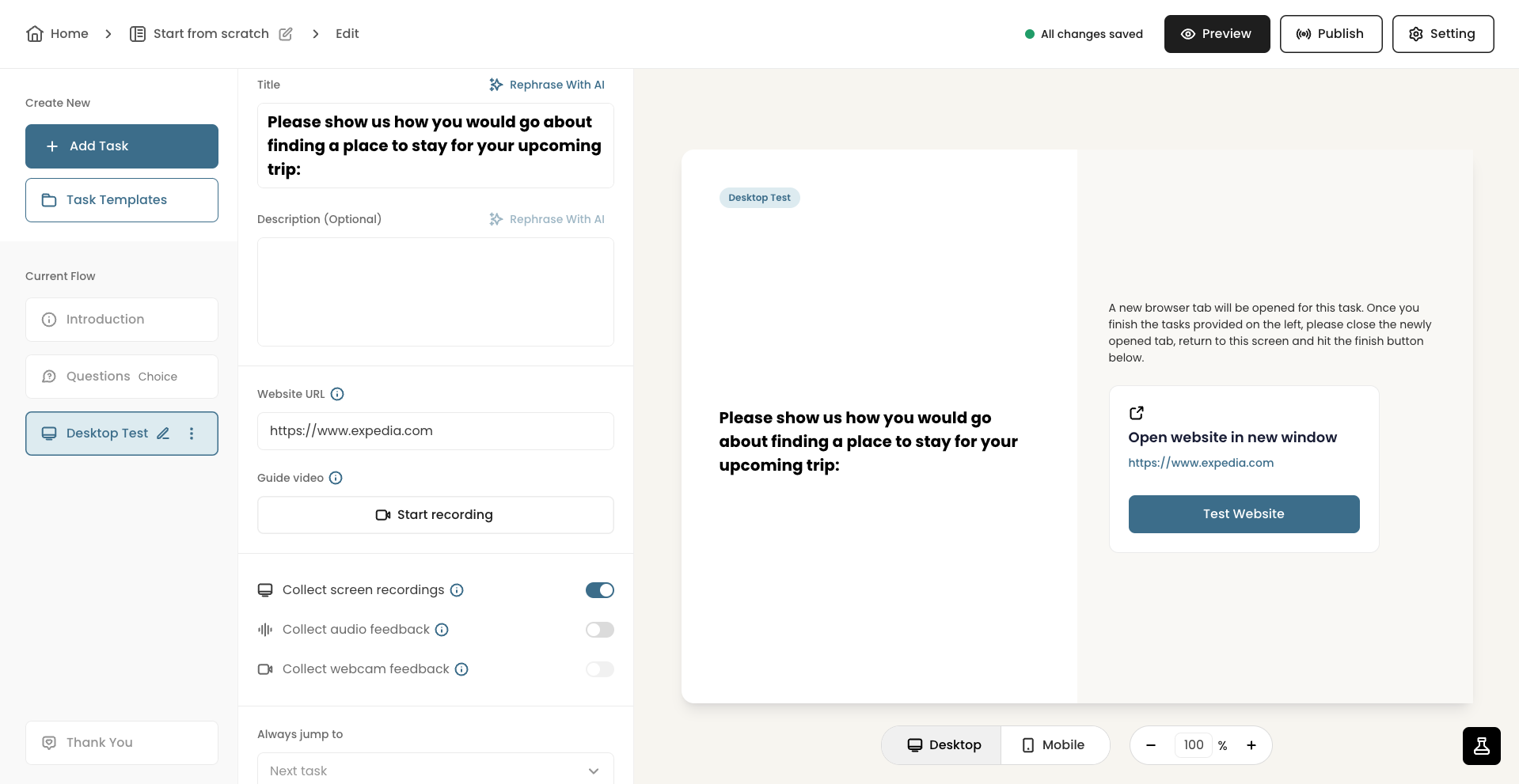

A live Desktop Test allows you to observe how users interact with your live website or app on a desktop device. Participants complete real tasks in their natural environment, helping you uncover usability issues, broken flows, or friction points in real-world conditions.

Desktop Live Testing is ideal for evaluating how users interact with fully functional, live websites or product pages. Since everything is working as it would in the real world—links, navigation, and dynamic content—you can observe natural user behavior, catch unexpected issues, and validate if your live experience aligns with user expectations. In contrast, prototype testing is better suited for earlier stages, where designs aren’t fully built and you’re testing flows or layouts before development.

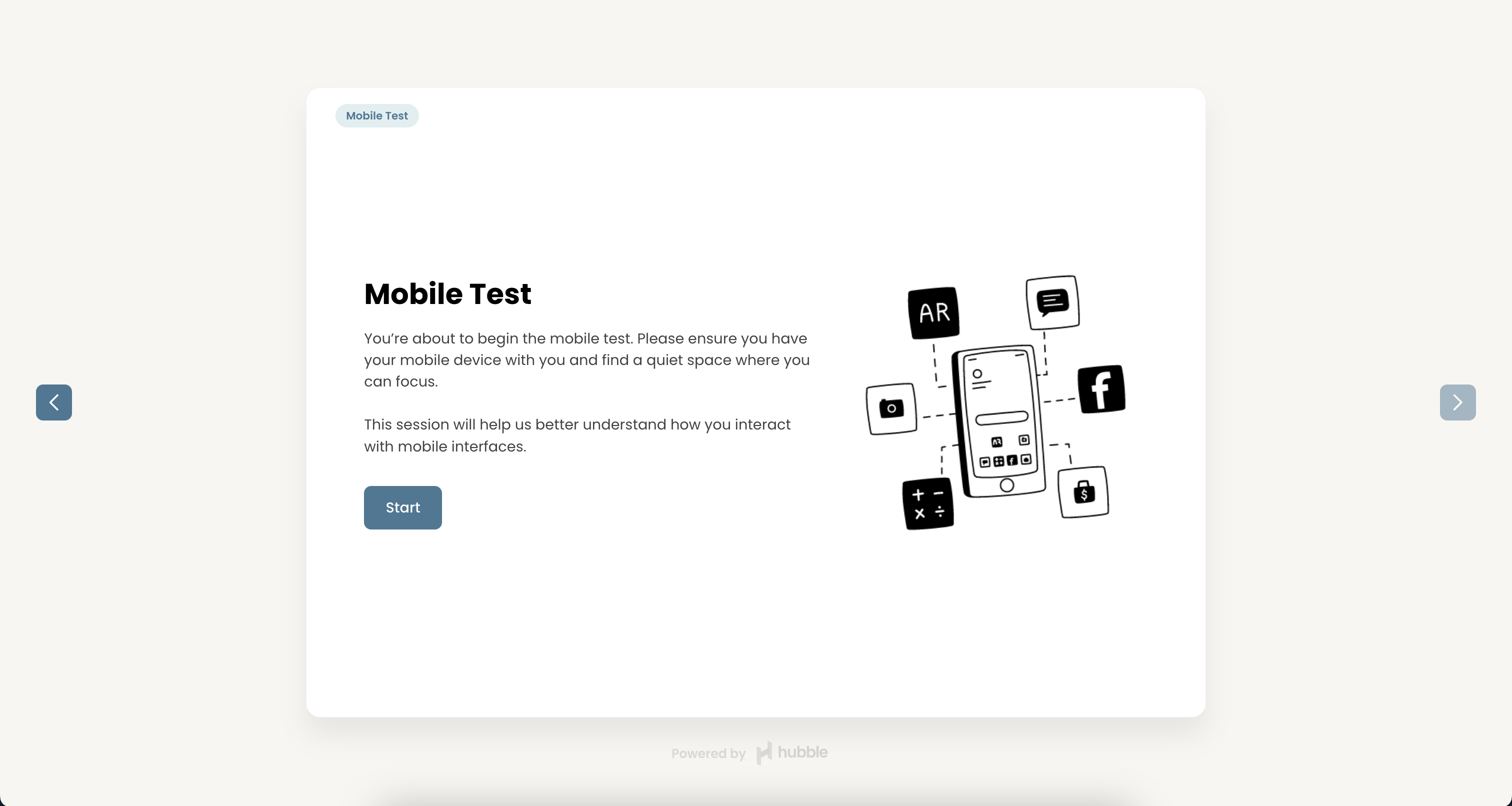

Mobile Tests

Mobile Testing helps you evaluate how users interact with your live mobile website or app on actual devices. Since users are navigating the fully built experience, you can observe real behaviors, uncover friction points, and ensure key actions work smoothly across different screen sizes, especially on their mobile devices.

The Mobile Test task is only available on mobile view, which requires participants to download our Hubble mobile app so we can better capture video, screen, or audio as they go through the mobile tasks.

First Click Tests

First Click Testing is a usability testing technique that focuses on the initial interaction users have with a website, application, or interface. It aims to evaluate the efficiency and intuitiveness of the design by honing in on the pivotal first click. This methodology is rooted in the understanding that the first interaction often sets the tone for the entire user experience.

According to research, "if the user's first click was correct, the chances of getting the overall scenario correct was .87." In other words, the first click strongly affects overall task success: participants whose first click was correct went on to complete the task 87% of the time, while those whose first click was incorrect succeeded only 46% of the time.
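To check whether the same pattern shows up in your own study data, you could split sessions by whether the first click was correct and compute the task success rate for each group. The sketch below assumes a hypothetical record shape with `firstClickCorrect` and `taskSucceeded` fields; this is not Hubble's export format, and the sample sessions are made up.

```javascript
// Sketch: task success rate split by first-click correctness.
// The session fields below are hypothetical, not Hubble's export format.
function successRateByFirstClick(sessions) {
  const groups = {
    correctFirstClick: { total: 0, succeeded: 0 },
    incorrectFirstClick: { total: 0, succeeded: 0 },
  };
  for (const session of sessions) {
    const key = session.firstClickCorrect ? "correctFirstClick" : "incorrectFirstClick";
    groups[key].total += 1;
    if (session.taskSucceeded) groups[key].succeeded += 1;
  }
  // A group with no sessions has no meaningful rate, so report null.
  const rate = (group) => (group.total === 0 ? null : group.succeeded / group.total);
  return {
    correctFirstClick: rate(groups.correctFirstClick),
    incorrectFirstClick: rate(groups.incorrectFirstClick),
  };
}

// Made-up sessions for illustration.
const sessions = [
  { firstClickCorrect: true, taskSucceeded: true },
  { firstClickCorrect: true, taskSucceeded: true },
  { firstClickCorrect: true, taskSucceeded: false },
  { firstClickCorrect: false, taskSucceeded: true },
  { firstClickCorrect: false, taskSucceeded: false },
];
console.log(successRateByFirstClick(sessions));
```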

In Hubble, the results page visualizes the participants’ interactions through heat maps. Hubble’s unmoderated study module will automatically generate heat maps and click filters to facilitate your data analysis process. With their first clicks and other subsequent clicks visualized as a heat map, you can identify potential design issues and make iterative improvements.

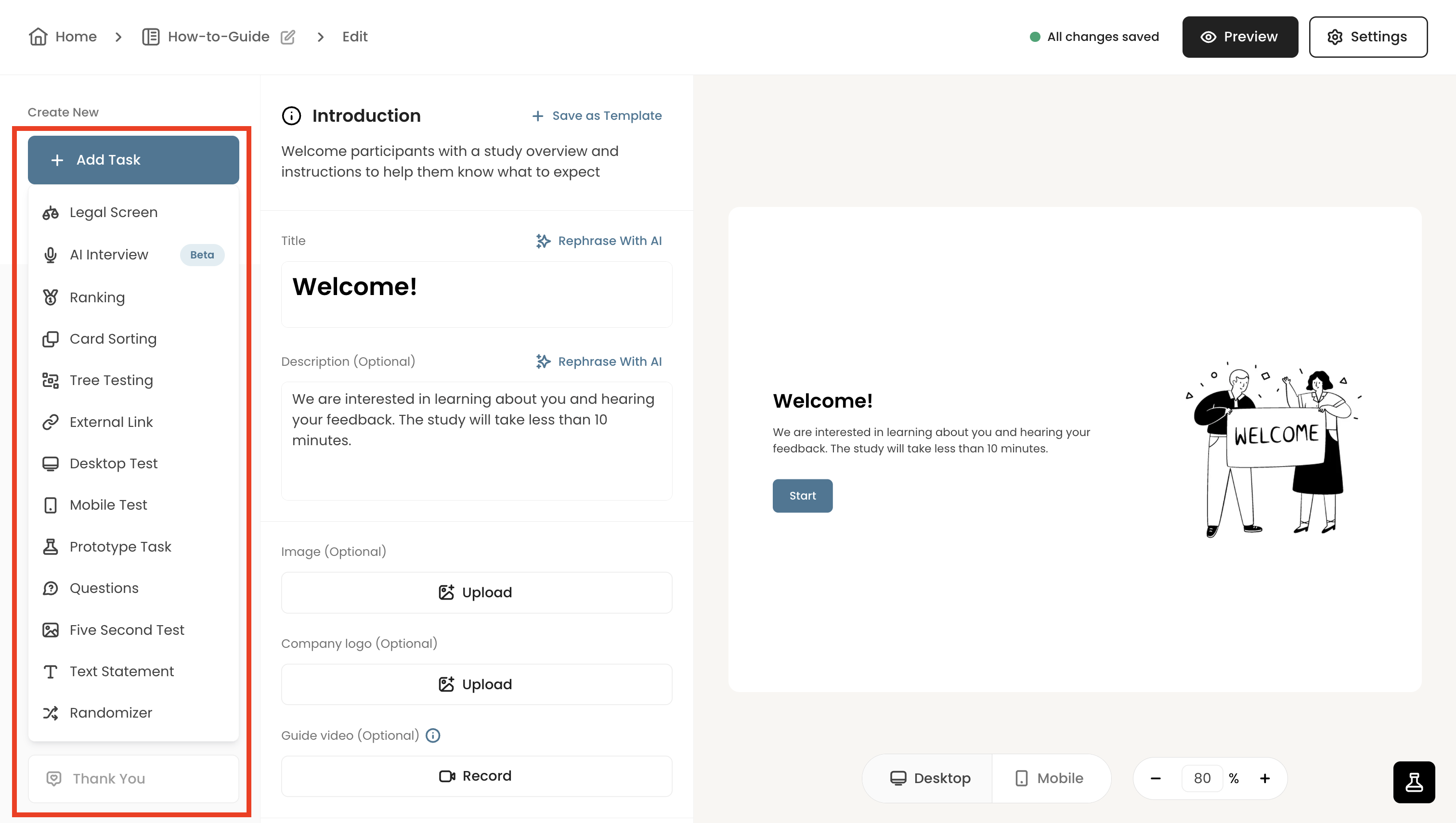

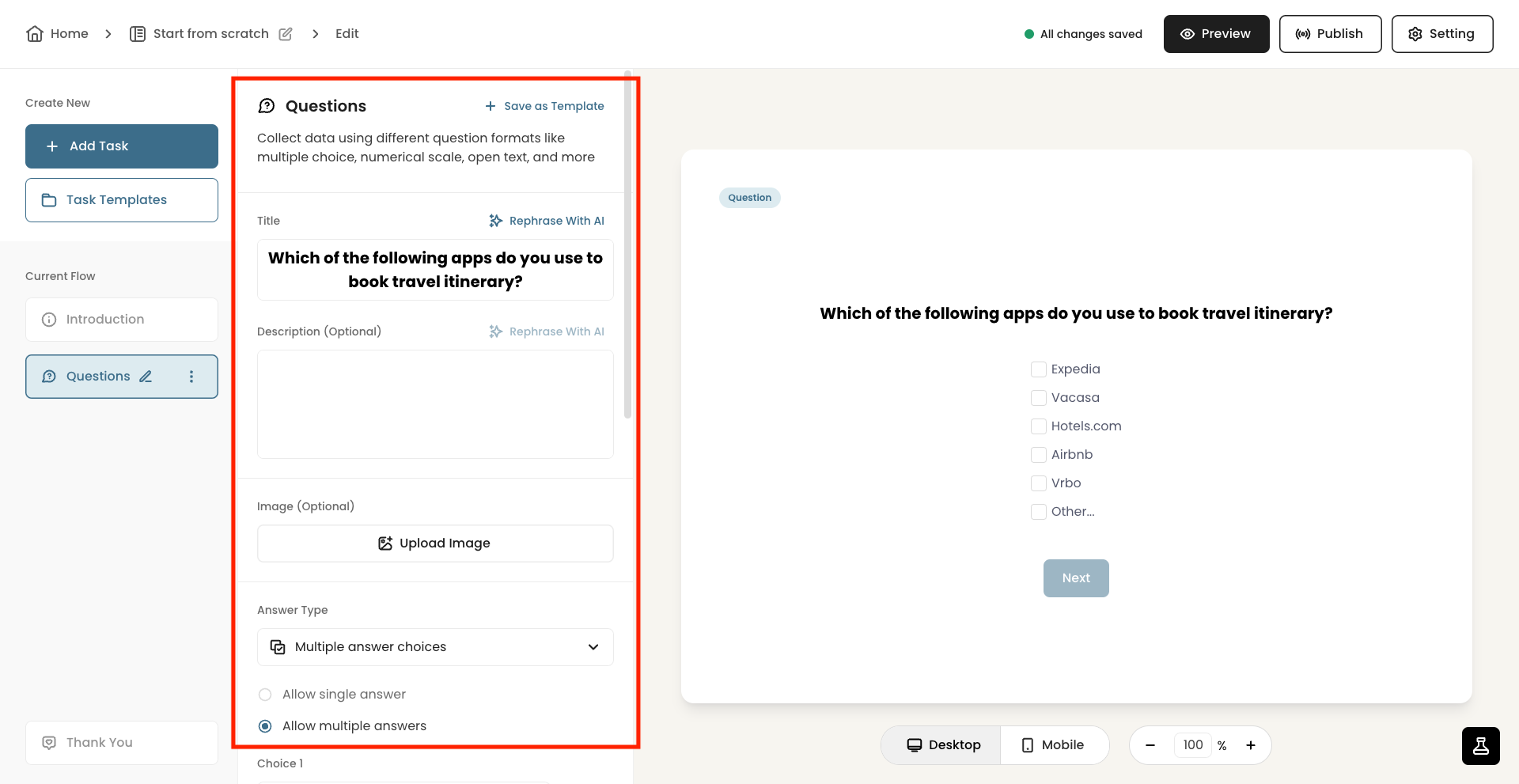

Designing Unmoderated Studies

In Hubble, you can design and customize studies by using various question types for in-product surveys and unmoderated usability tests. In the leftmost panel, you can manage the study flow by adding, removing, and reordering tasks and questions.

Once a task or question is added, you can edit its details in the second panel from the left, such as titles, additional details, and other options. As you build the study, a live preview is displayed on the right half of the screen. Make sure to preview the study so that it appears as you intend.

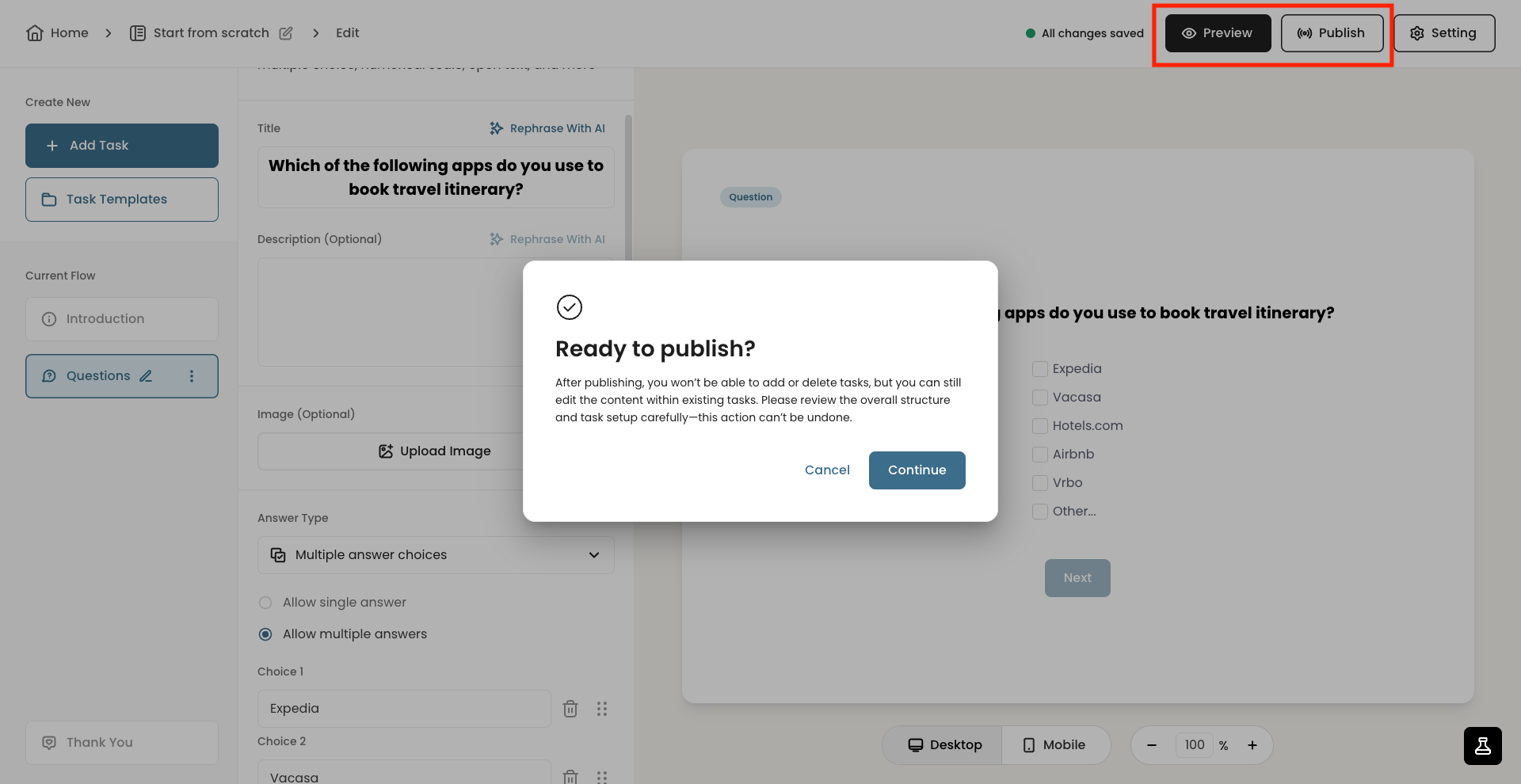

Once you are done building the study, you can preview the study in full screen or publish the study.

Types of Unmoderated Study Tasks

Unmoderated studies are the core of Hubble, offering a variety of research methods and question types that allow you to scale research easily without live moderation. Below are brief descriptions of the available tasks.

- AI Interview: lets AI naturally converse with participants. You can provide a set of instructions or specific discussion guide to fill in additional context for the AI.

- Legal Screen: allows you to attach NDA documents via link or text. Participants won't be able to proceed with the study unless they agree to the terms.

- Ranking: asks respondents to order items based on preference or importance, helping you understand priorities or comparative preferences.

- Card Sorting: displays an interface for running card sort questions. Card sorting is especially helpful for understanding users’ mental models and information architecture.

- Tree Testing: helps you evaluate how easily users can find information by navigating a simplified text-based version of your site’s structure, helping identify navigation or labeling issues.

- External Link: lets you insert external links into the study for users to click. Examples include links to an NDA document, product websites, or additional surveys.

- Desktop Test: allows you to have participants open a live app on desktop to complete tasks while video, screen, or audio is recorded.

- Mobile Test: lets you run usability tests natively on participants' mobile devices. Participants will download our app to participate in mobile tasks.

- Text Statement: shows a page with text including title and paragraph. This form is useful for introduction and providing additional information or guidance.

- Prototype Task: allows you to load Figma prototypes for prototype testing. You can set multiple "steps" or mini-tasks within a single prototype task.

- Five Second Test: lets you present a static image for a set amount of time ranging from 1 to 60 seconds.

- Randomizer: a utility task that randomizes the order of questions so that you can counterbalance the order in which they are presented.

The Question Task provides question forms that can be customized to your needs. Below are the different options:

- Multi Answer Choices: shows a number of options to select from. This option includes both single-select and multi-select modes.

- Numerical Scale: provides a visual numerical scale for users to select. You can further customize the low and high value of the scale.

- Star Rating: shows a visual star rating option for users to respond to.

- Emoji Rating: is a simple and yet intuitive way to gauge emotional response from users. It provides the options to specify emojis and their descriptions.

- Open Text: provides a text field for users to respond in and elaborate. This option is frequently used as a follow-up to collect additional qualitative data.

- Matrix Selection: allows participants to rate or evaluate multiple items using the same set of answer options, making it efficient to gather feedback across several factors in a consistent format.

- Video Response: allows participants to record their video or audio to respond to a particular question. This is useful if you want to design a semi-unmoderated interview style study.

- Yes or No Question: provides a closed yes/no question for users to select.

- Image Preference: allows you to upload multiple images and have users select from.

In-product Survey and Intercepts

Aside from traditional surveys, in-product surveys or intercepts let you display a survey modal that pops up while real users engage with your web app. Below are some key use cases, especially when you want to gather contextual, real-time feedback directly within your product:

- Trigger surveys when users abandon signup, checkout, onboarding, or other key moments.

- Measure feature satisfaction by prompting a survey after a user interacts with a newly launched feature.

- Collect bug reports and user feedback using open-text responses.

- Gauge post-task or post-interaction feedback after a user completes a key user task.

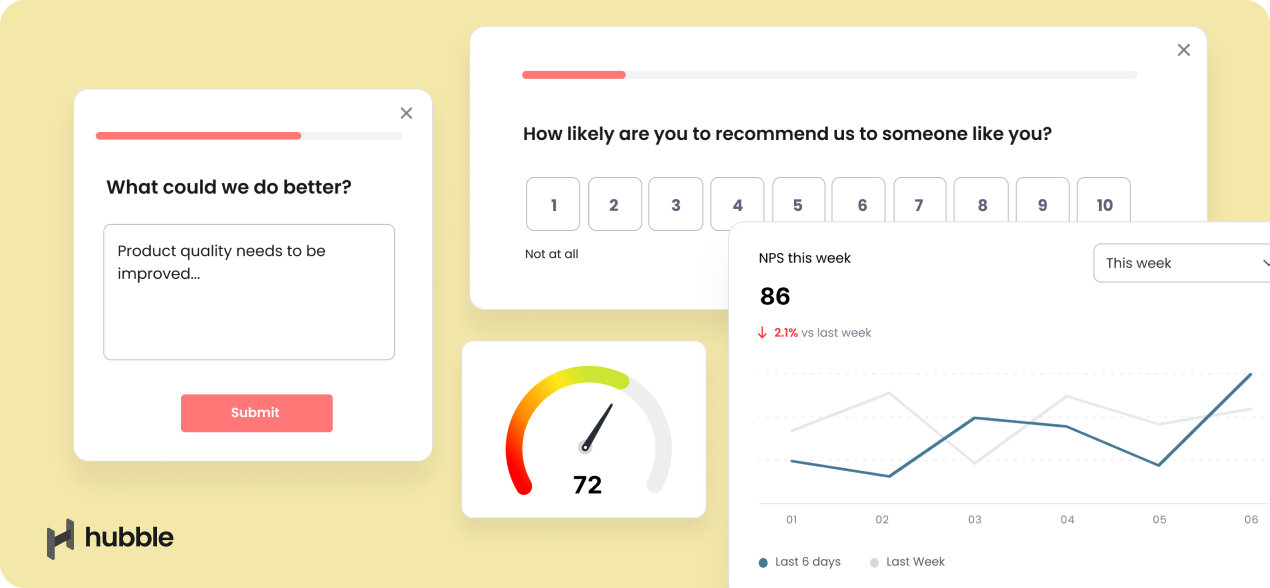

- Gather Net Promoter Score (NPS) or general satisfaction to track user sentiment over time.

- Collect segment-specific feedback by tailoring questions to a specific user type or user journey.

Building in-product surveys is similar to designing unmoderated tests. While the available question types may vary slightly, they generally follow the same formats, such as multiple choice, emoji ratings, numerical scales, star ratings, and open-text responses. Keep in mind that the survey modal appears as a small overlay on the screen, so it's best to keep text concise due to space limitations.

- Please see our step-by-step guide on launching in-product surveys in this article.

What makes in-product surveys powerful is the ability to target specific user segments in real time based on events or actions they take. When publishing a survey, you can configure one of four available trigger options to control when and to whom the survey appears.

- Page URL: You can provide the URL of a specific page where you want the survey to be prompted. Note that you can add multiple URLs for a single survey.

- CSS selector: You can prompt a survey when a user interacts with a specific element within a page. For example, you can select a certain div block or CTA button to trigger the survey.

- Event: You can prompt a survey when a predefined event is triggered. Events can be defined flexibly as long as they are properly set up, but this requires adding the event tracking code Hubble.track('eventName') to your source code.

- Manually via SDK API: You can display surveys manually through the installed Hubble JavaScript SDK. Inserting window.Hubble.show(id) with the survey ID in your source code will display the survey on the page and at the moment you specify.
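As a rough illustration of the Event and SDK triggers, the sketch below wraps the two calls named above, `Hubble.track('eventName')` and `window.Hubble.show(id)`, in small guard functions so that nothing breaks if the SDK has not loaded. The helper function names, the event name `checkout_completed`, and the survey ID `survey_123` are placeholders invented for this example; confirm the exact SDK behavior against the SDK documentation.

```javascript
// Sketch only: helper names, the event name, and the survey ID are hypothetical.
// `hubble` stands for the SDK object this article refers to as window.Hubble.

// Fire a predefined event so that an Event-triggered survey can appear.
function trackHubbleEvent(hubble, eventName) {
  if (!hubble || typeof hubble.track !== "function") {
    return false; // SDK not available; skip without throwing
  }
  hubble.track(eventName);
  return true;
}

// Show a specific survey manually at a moment you choose.
function showHubbleSurvey(hubble, surveyId) {
  if (!hubble || typeof hubble.show !== "function") {
    return false; // SDK not available; skip without throwing
  }
  hubble.show(surveyId);
  return true;
}

// In a browser page, pass the global SDK object if it exists.
const sdk = typeof window !== "undefined" ? window.Hubble : undefined;
trackHubbleEvent(sdk, "checkout_completed"); // placeholder event name
showHubbleSurvey(sdk, "survey_123"); // placeholder survey ID
```

In practice you would call only one of these at a given point in your code, for example tracking an event after a checkout completes, or showing a survey from a feedback button's click handler.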

To learn more about survey triggers and publishing surveys, please refer to the article.

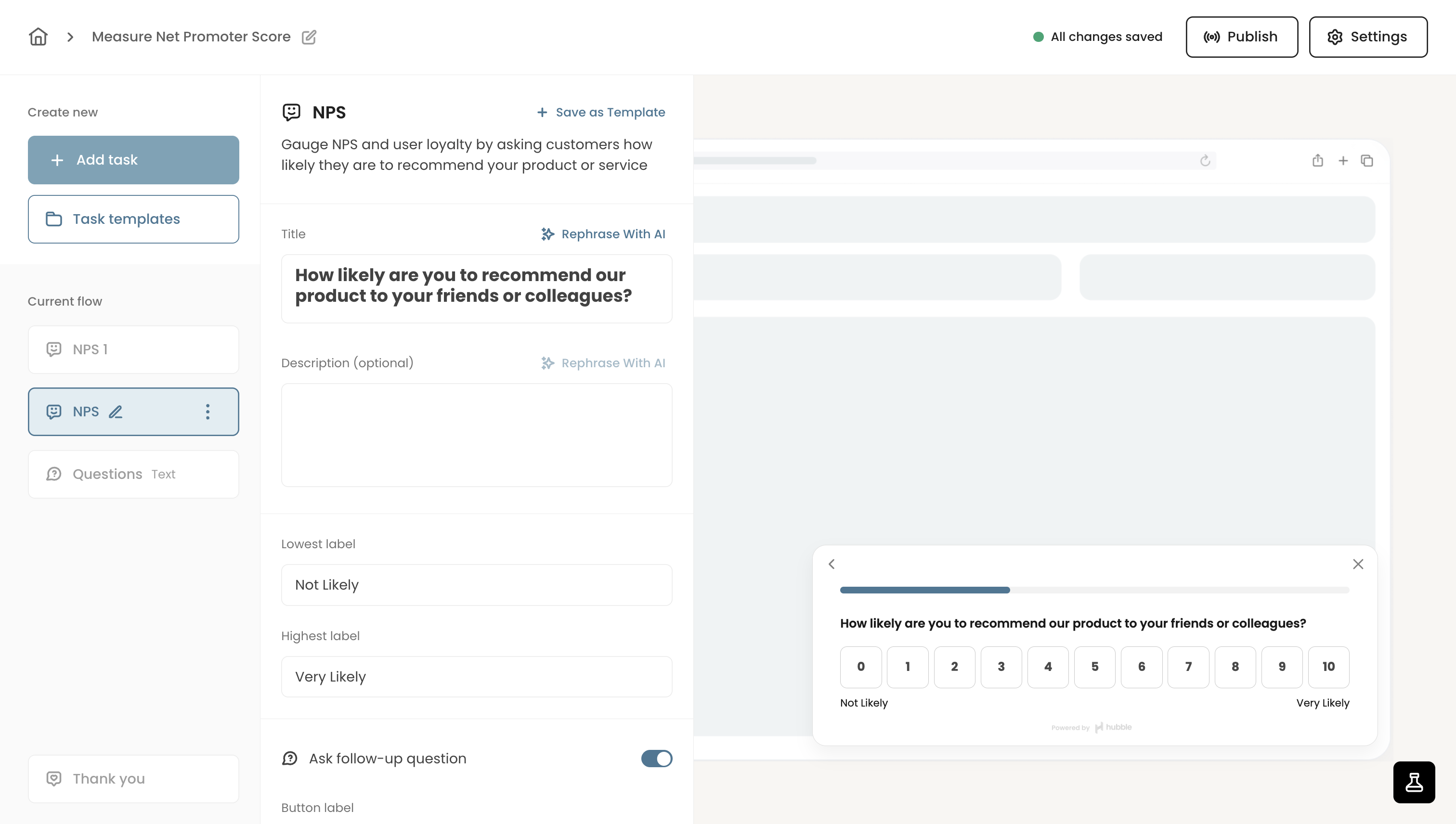

Net Promoter Score (NPS)

Net Promoter Score (NPS) is a simple yet powerful tool for gauging customer loyalty and identifying opportunities for improvement. In survey projects, you can simply add the NPS option to set up an NPS study, which will open a predefined template.

For a step-by-step guide on setting up an NPS survey, refer to the article How to setup Net Promoter Score (NPS) survey.

On the results page, the NPS is calculated automatically from the ratio of promoters, passives, and detractors, and is shown along with the score distribution. To make better sense of NPS results, see our dedicated article Making sense of Net Promoter Score (NPS).
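For reference, the standard NPS arithmetic treats ratings of 9 or 10 as promoters, 7 or 8 as passives, and 0 through 6 as detractors, and reports the percentage of promoters minus the percentage of detractors. Hubble does this calculation for you; the sketch below only illustrates the arithmetic and is not Hubble's internal code. The function name and sample ratings are made up.

```javascript
// Sketch of the standard NPS arithmetic; not Hubble's internal code.
// Ratings are answers on the usual 0-10 "how likely are you to recommend" scale.
function computeNps(ratings) {
  if (ratings.length === 0) {
    return null; // no responses, so no score
  }
  let promoters = 0;
  let passives = 0;
  let detractors = 0;
  for (const rating of ratings) {
    if (rating >= 9) promoters += 1;
    else if (rating >= 7) passives += 1;
    else detractors += 1;
  }
  // NPS = % promoters - % detractors, rounded to a whole number from -100 to 100.
  const score = Math.round(((promoters - detractors) / ratings.length) * 100);
  return { promoters, passives, detractors, score };
}

// Made-up sample: 5 promoters, 3 passives, 2 detractors.
const sample = [10, 9, 9, 10, 9, 8, 7, 7, 6, 3];
console.log(computeNps(sample)); // score is 30
```

Note that passives do not add to or subtract from the score directly, but they still count toward the total number of responses.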

Data Collection and Participant Recruitment

Once a study is live, it’s time to collect data. There are two ways to do this: you can either invite participants from your own internal pool or use Hubble’s participant recruitment service.

We’ve partnered with Respondent and User Interviews to give you access to millions of potential participants, helping you quickly find the right people, often within 30 minutes. With unmoderated testing and streamlined recruitment combined, Hubble makes it easy to scale your research efficiently.

To learn more about participant recruitment, see our guides to get you started.

Collaboration in Hubble

Collaboration is key when conducting user research, and Hubble makes it easy to invite collaborators and team members. Involving key stakeholders early increases visibility into ongoing projects and fosters stronger teamwork. Hubble offers several ways to keep your team aligned and in sync throughout the research process:

Inviting team members to workspace

Once you are logged in to your workspace, you can add and manage collaborators under Settings > Team tab > Team Members section.

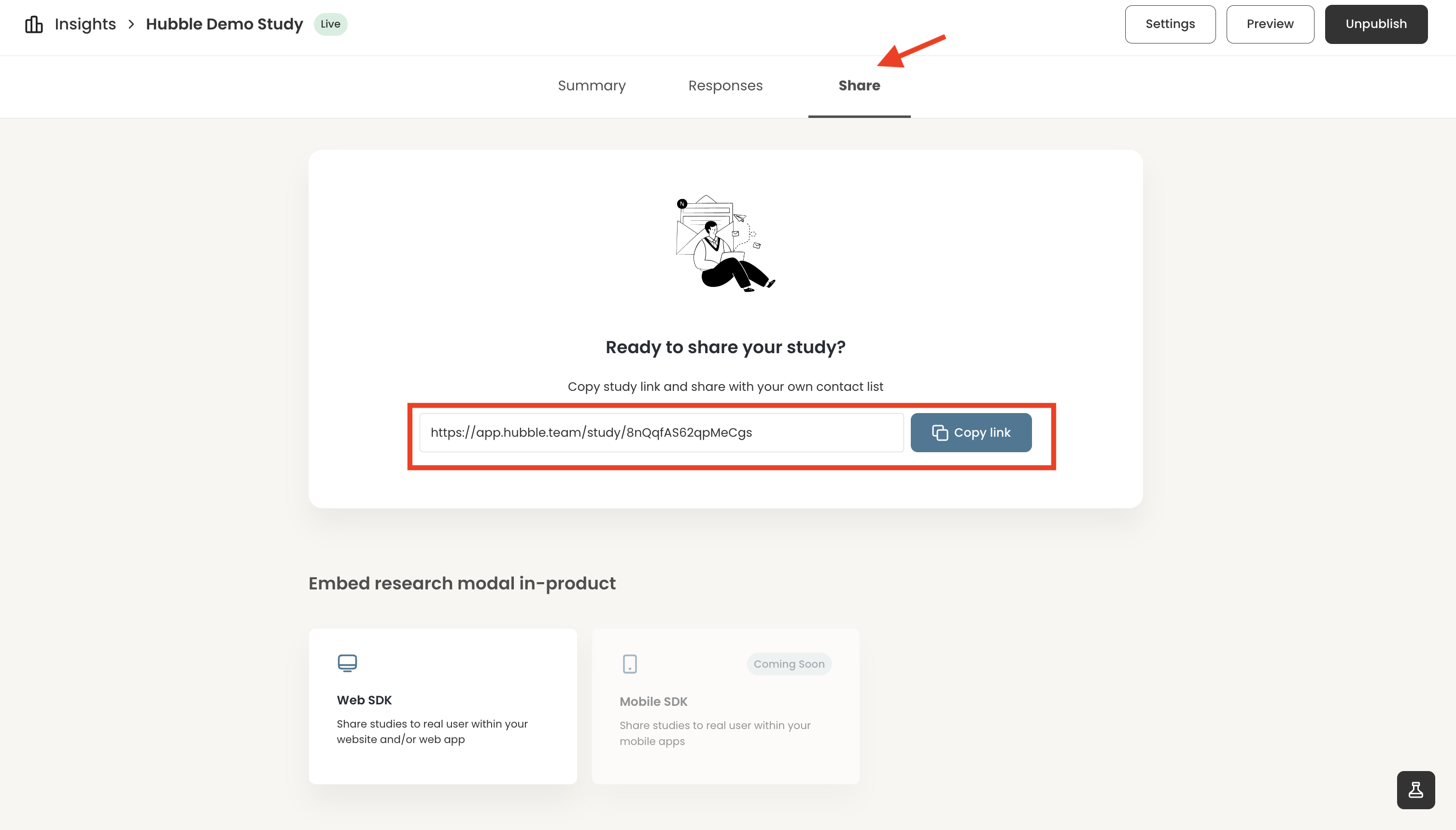

Preview studies and sharing published studies

Even if some team members aren’t part of your workspace, you can still share study progress and collect feedback by sending them the study preview link.

Once a survey or unmoderated study is published, the public link is available in the Share tab of the project page. You can use the URL to distribute the survey to participants or your team members.

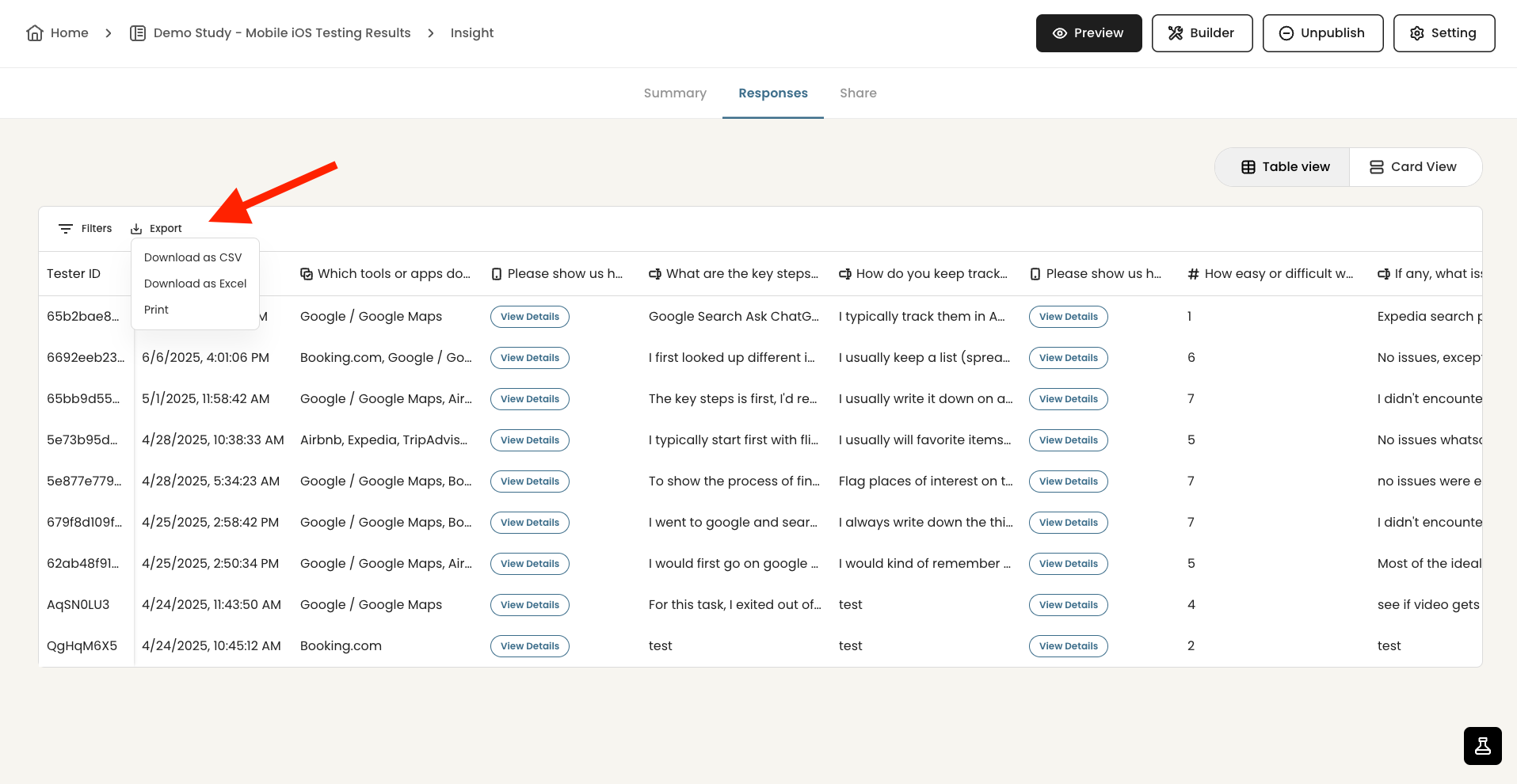

Exporting study results

As study responses are submitted, the data will become immediately available for review. The Summary tab displays a summarized view of all the responses that are submitted.

In the Responses tab, you can review individual submissions in a table view. For a closer examination or to share with others, you can export the table as a CSV file.

Maximizing Research ROI with Hubble

Below are a few approaches to get the most out of Hubble in your research efforts:

1. Establish lean research practice in small increments

User research and collecting product feedback are not one-time activities. Just as a product needs to be constantly developed and evolved to suit user needs, user research should be a continuous effort that closely involves users in product development.

With in-product surveys and unmoderated studies, Hubble allows you to easily set up, design, and launch studies. Running iterative studies at a small scale leaves room for failure, since you can diagnose what works and what doesn’t early in the development cycle. Moreover, if you are running usability studies, more than 80% of usability problems are found with the first five participants, and there are significant diminishing returns from additional participants. Instead of running one large moderated study with 10 or more participants, we highly recommend running a series of studies with design iterations and a small number of participants.
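The diminishing returns can be illustrated with the commonly cited problem-discovery model, in which the share of usability problems found by n participants is 1 - (1 - L)^n. The sketch below assumes L = 0.31, an often-quoted average chance that a single participant reveals a given problem; this value is an assumption for illustration and varies across products and studies. The function name is made up.

```javascript
// Sketch of the commonly cited problem-discovery model: found = 1 - (1 - L)^n.
// L = 0.31 is an assumed average detection rate per participant; real values vary by study.
function shareOfProblemsFound(participants, detectionRate = 0.31) {
  return 1 - Math.pow(1 - detectionRate, participants);
}

// Print the estimated share of problems found for a few sample sizes.
for (const n of [1, 3, 5, 10, 15]) {
  const percent = (shareOfProblemsFound(n) * 100).toFixed(1);
  console.log(`${n} participants: about ${percent}% of problems found`);
}
```

Under these assumptions, five participants surface roughly 84% of problems, while doubling to ten adds only about 13 more percentage points, which is why several small rounds with design changes in between tend to be more productive than one large round.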

- For integrating the Jobs-to-be-Done framework, see our overview guide.

- To learn more about key usability metrics to track, see our comprehensive guide.

- For more on evangelizing research at your organization, see our guide Establishing your UX research strategy.

2. Engage with real users through in-product surveys

Collecting real, contextual feedback can be challenging, especially when scheduling participants for studies can take weeks, only to have them rely on memory to recount their experience with a product.

With in-product surveys, you can immediately follow up with users for feedback by triggering the survey modal on specific events or flows that users go through. This approach not only helps you evaluate how the current product is doing, but also helps maintain user engagement and satisfaction by demonstrating that you value user input and are actively seeking ways to improve the product based on their feedback.

3. Streamline research from recruiting and design to execution and reporting

Depending on the industry or type of target users, it can be challenging to get hold of actual users or a panel of participants. To help streamline your research process, Hubble provides high-quality B2B and B2C participants by partnering with leading recruiting platforms, Respondent and User Interviews.

By combining in-product surveys with a variety of unmoderated tests, you can triangulate your research data and inform product decisions based on user-driven data. Hubble enables you to run small, repeatable studies that scale with your needs by acting as a flexible research engine for ongoing user experience insights.

FAQs

Hubble is unified user research software for product teams to continuously collect feedback from users. Hubble offers a suite of tools including contextual in-product surveys, usability tests, prototype tests, and user segmentation to collect customer insights in all stages of product development.

At a high level, Hubble offers in-product surveys and unmoderated studies. You can easily customize and design the study using our pre-built templates.

Some common research methods are concept and prototype testing, usability testing, card sorting, A/B split testing, first click and five second tests, Net Promoter Score surveys, and more.

Hubble allows product teams to make data-driven decisions with in-product surveys and unmoderated studies. You can build a lean UX research practice to test your design early, regularly hear from your users, and ultimately streamline the research protocol by recruiting participants, running studies, and analyzing data in a single tool.

Hubble’s unmoderated study option allows you to connect Figma prototypes to its interface to easily load and design studies. Once your study is loaded, you can create follow-up tasks and relevant questions.

To learn more, see the guide section.